
ResAdapter Model Card

Project Page | Paper | Code | Gradio demo | ComfyUI Extension

Introduction

We propose ResAdapter, a plug-and-play resolution adapter that enables any diffusion model to generate resolution-free images, with no additional training, no additional inference, and no style transfer.

Usage

We provide standalone example code to help you quickly use ResAdapter with diffusion models.

Comparison examples (640x384) between resadapter and dreamshaper-xl-1.0. Top: with resadapter. Bottom: without resadapter.

# pip install torch torchvision diffusers transformers accelerate peft safetensors huggingface_hub

import torch
from torchvision.utils import save_image
from safetensors.torch import load_file
from huggingface_hub import hf_hub_download
from diffusers import AutoPipelineForText2Image, DPMSolverMultistepScheduler

# Seed the global RNG so the baseline run is reproducible
torch.manual_seed(0)
prompt = "portrait photo of muscular bearded guy in a worn mech suit, light bokeh, intricate, steel metal, elegant, sharp focus, soft lighting, vibrant colors"
width, height = 640, 384

# Load baseline pipe
model_name = "lykon-models/dreamshaper-xl-1-0"
pipe = AutoPipelineForText2Image.from_pretrained(model_name, torch_dtype=torch.float16, variant="fp16").to("cuda")
pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config, use_karras_sigmas=True, algorithm_type="sde-dpmsolver++")

# Inference baseline pipe
image = pipe(prompt, width=width, height=height, num_inference_steps=25, num_images_per_prompt=4, output_type="pt").images
save_image(image, "image_baseline.png", normalize=True, padding=0)

# Load resadapter for baseline
resadapter_model_name = "resadapter_v1_sdxl"

# Load LoRA weights
pipe.load_lora_weights(
    hf_hub_download(repo_id="jiaxiangc/res-adapter", subfolder=resadapter_model_name, filename="pytorch_lora_weights.safetensors"),
    adapter_name="res_adapter",
)
pipe.set_adapters(["res_adapter"], adapter_weights=[1.0])

# Load norm weights
pipe.unet.load_state_dict(
    load_file(hf_hub_download(repo_id="jiaxiangc/res-adapter", subfolder=resadapter_model_name, filename="diffusion_pytorch_model.safetensors")),
    strict=False,
)

# Inference resadapter pipe; re-seed so both runs start from the same RNG state
torch.manual_seed(0)
image = pipe(prompt, width=width, height=height, num_inference_steps=25, num_images_per_prompt=4, output_type="pt").images
save_image(image, "image_resadapter.png", normalize=True, padding=0)

For more details, please follow the instructions in our GitHub repository.
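The loading steps in the example can be wrapped in a small helper so that one pipeline can be reused with different adapter strengths. This is only a sketch: `attach_resadapter` is a hypothetical name, not part of the ResAdapter code or of diffusers, and it assumes the two weight files have already been fetched (for instance with `hf_hub_download` and `load_file`, as in the example). It calls only the pipeline methods already used above.

```python
def attach_resadapter(pipe, lora_path, norm_state_dict, alpha=1.0, adapter_name="res_adapter"):
    """Attach ResAdapter weights to an existing diffusers pipeline.

    Hypothetical helper that mirrors the three calls from the usage example.
    `lora_path` is the local path of pytorch_lora_weights.safetensors and
    `norm_state_dict` is the dict loaded from diffusion_pytorch_model.safetensors.
    """
    # 1. Register the LoRA weights under a named adapter.
    pipe.load_lora_weights(lora_path, adapter_name=adapter_name)
    # 2. Set the adapter strength (assumed here to be the "alpha" of the usage tips).
    pipe.set_adapters([adapter_name], adapter_weights=[alpha])
    # 3. Load the norm weights; strict=False as in the example, presumably
    #    because the file covers only a subset of the UNet parameters.
    pipe.unet.load_state_dict(norm_state_dict, strict=False)
    return pipe
```

With this in place, trying a different strength amounts to calling `pipe.set_adapters(["res_adapter"], adapter_weights=[alpha])` again with a new `alpha`, without reloading any files.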

Models

| Models | Parameters | Resolution Range | Ratio Range | Links |
|---|---|---|---|---|
| resadapter_v2_sd1.5 | 0.9M | 128 <= x <= 1024 | 0.28 <= r <= 3.5 | Download |
| resadapter_v2_sdxl | 0.5M | 256 <= x <= 1536 | 0.28 <= r <= 3.5 | Download |
| resadapter_v1_sd1.5 | 0.9M | 128 <= x <= 1024 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sd1.5_extrapolation | 0.9M | 512 <= x <= 1024 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sd1.5_interpolation | 0.9M | 128 <= x <= 512 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sdxl | 0.5M | 256 <= x <= 1536 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sdxl_extrapolation | 0.5M | 1024 <= x <= 1536 | 0.5 <= r <= 2 | Download |
| resadapter_v1_sdxl_interpolation | 0.5M | 256 <= x <= 1024 | 0.5 <= r <= 2 | Download |
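
The ranges in the table can be turned into a quick pre-flight check before generating. The sketch below is an illustration, not part of the ResAdapter code: `RESADAPTER_RANGES` and `in_supported_range` are hypothetical names, and it assumes that `x` is the length of each image side in pixels and that `r` is the width-to-height ratio (the table does not define either symbol).

```python
# Ranges copied from the table: (min_side, max_side, min_ratio, max_ratio).
RESADAPTER_RANGES = {
    "resadapter_v2_sd1.5": (128, 1024, 0.28, 3.5),
    "resadapter_v2_sdxl": (256, 1536, 0.28, 3.5),
    "resadapter_v1_sd1.5": (128, 1024, 0.5, 2.0),
    "resadapter_v1_sd1.5_extrapolation": (512, 1024, 0.5, 2.0),
    "resadapter_v1_sd1.5_interpolation": (128, 512, 0.5, 2.0),
    "resadapter_v1_sdxl": (256, 1536, 0.5, 2.0),
    "resadapter_v1_sdxl_extrapolation": (1024, 1536, 0.5, 2.0),
    "resadapter_v1_sdxl_interpolation": (256, 1024, 0.5, 2.0),
}


def in_supported_range(model_name, width, height):
    """Return True if width x height lies inside the listed range of model_name.

    Assumption: both sides must satisfy the resolution range, and the
    width/height ratio must satisfy the ratio range.
    """
    min_side, max_side, min_ratio, max_ratio = RESADAPTER_RANGES[model_name]
    sides_ok = all(min_side <= side <= max_side for side in (width, height))
    ratio_ok = min_ratio <= width / height <= max_ratio
    return sides_ok and ratio_ok
```

Under these assumptions, the 640x384 setting from the usage example falls inside the range of `resadapter_v1_sdxl`, while a 1024x384 request is outside the ratio range of `resadapter_v1_sd1.5` but inside that of `resadapter_v2_sd1.5`.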

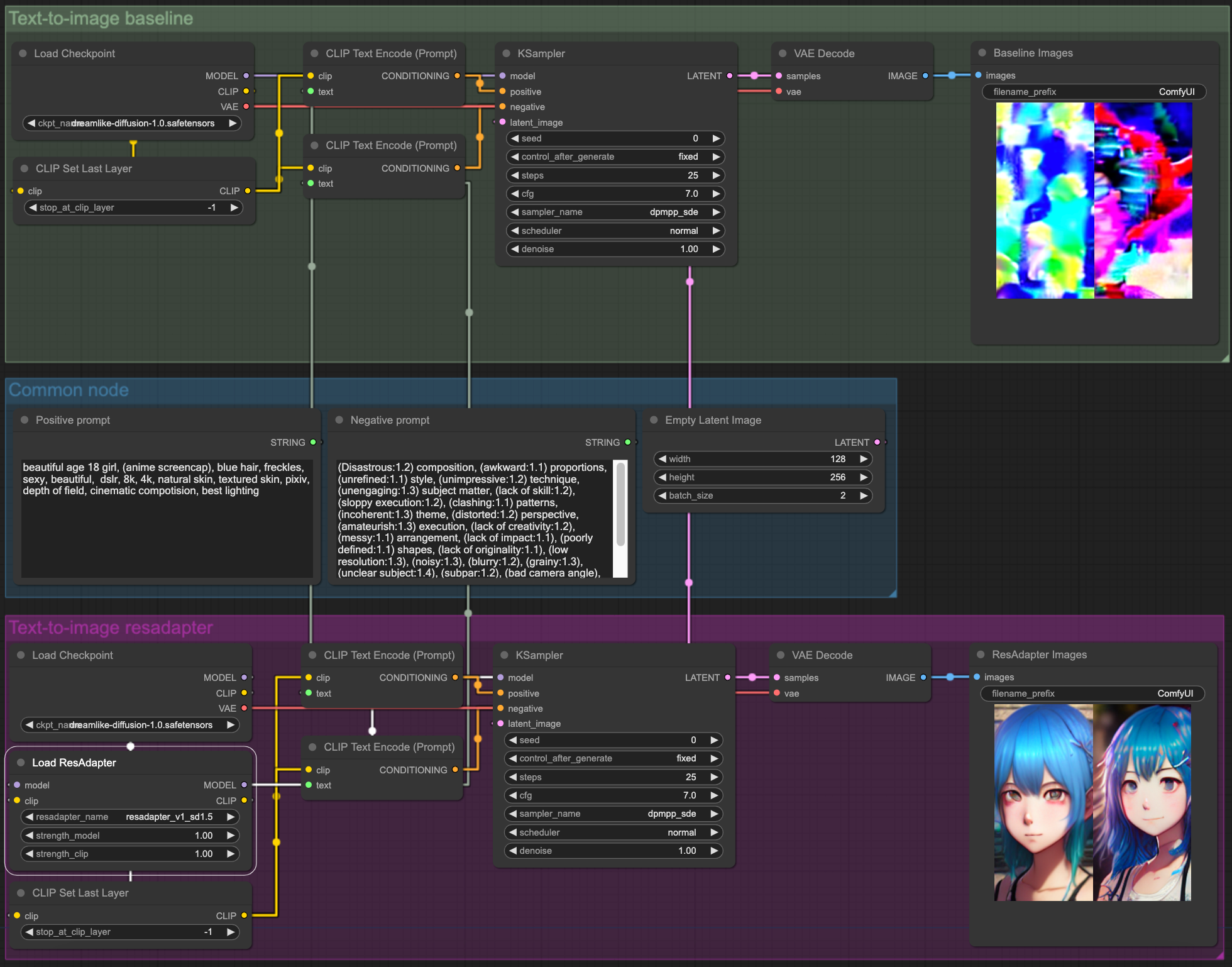

ComfyUI Extension

Text-to-Image

- workflow: resadapter_text_to_image_workflow.

- models: dreamlike-diffusion-1.0

ControlNet

- workflow: resadapter_controlnet_workflow.

- models: stable-diffusion-v1-5, sd-controlnet-canny

IPAdapter

- workflow: resadapter_ipadapter_workflow.

- models: stable-diffusion-v1-5, IP-Adapter

Accelerate LoRA

- workflow: resadapter_accelerate_lora_workflow.

- models: stable-diffusion-v1-5, lcm-lora-sdv1-5

Usage Tips

- If you are not satisfied with interpolated images, try increasing the alpha of ResAdapter to 1.0.

- If you are not satisfied with extrapolated images, try an alpha between 0.3 and 0.7.

- If the images show style conflicts, try decreasing the alpha of ResAdapter.

- If ResAdapter is not compatible with an acceleration LoRA, try decreasing the alpha of ResAdapter to between 0.5 and 0.7.
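
The tips above can be condensed into a starting value for alpha. The function below is a rough sketch, not an official recommendation: `starting_alpha` and its parameters are hypothetical, `base_size` is assumed to be the base model's native resolution (512 for SD1.5 and 1024 for SDXL, inferred from the interpolation and extrapolation rows of the Models table), and "extrapolation" is assumed to mean a pixel count above `base_size * base_size`. The midpoints 0.5 and 0.6 are arbitrary picks inside the suggested intervals.

```python
def starting_alpha(width, height, base_size, with_acceleration_lora=False):
    """Pick an initial ResAdapter alpha following the usage tips.

    Hypothetical heuristic; tune the returned value by inspecting the images.
    """
    if with_acceleration_lora:
        # Tip: lower alpha to 0.5 ~ 0.7 when combined with an acceleration LoRA.
        return 0.6
    if width * height > base_size * base_size:
        # Extrapolation (assumed: more pixels than the native resolution): 0.3 ~ 0.7.
        return 0.5
    # Interpolation: the tips suggest going up to 1.0.
    return 1.0
```

The returned value would then be passed as the adapter weight, for example `pipe.set_adapters(["res_adapter"], adapter_weights=[starting_alpha(640, 384, 1024)])`, assuming the adapter was loaded under the name `res_adapter` as in the usage example. If style conflicts appear, lower the value further as the third tip suggests.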

Citation

If you find ResAdapter useful for your research and applications, please cite us using this BibTeX:

@article{cheng2024resadapter,
  title={ResAdapter: Domain Consistent Resolution Adapter for Diffusion Models},
  author={Cheng, Jiaxiang and Xie, Pan and Xia, Xin and Li, Jiashi and Wu, Jie and Ren, Yuxi and Li, Huixia and Xiao, Xuefeng and Zheng, Min and Fu, Lean},
  journal={arXiv preprint arXiv:2403.02084},
  year={2024}
}

For any questions, please feel free to contact us at chengjiaxiang@bytedance.com or xiepan.01@bytedance.com.