🐣 Please follow me for new updates https://twitter.com/camenduru

🔥 Please join our discord server https://discord.gg/k5BwmmvJJU

🥳 Please join my patreon community https://patreon.com/camenduru

📋 Tutorial

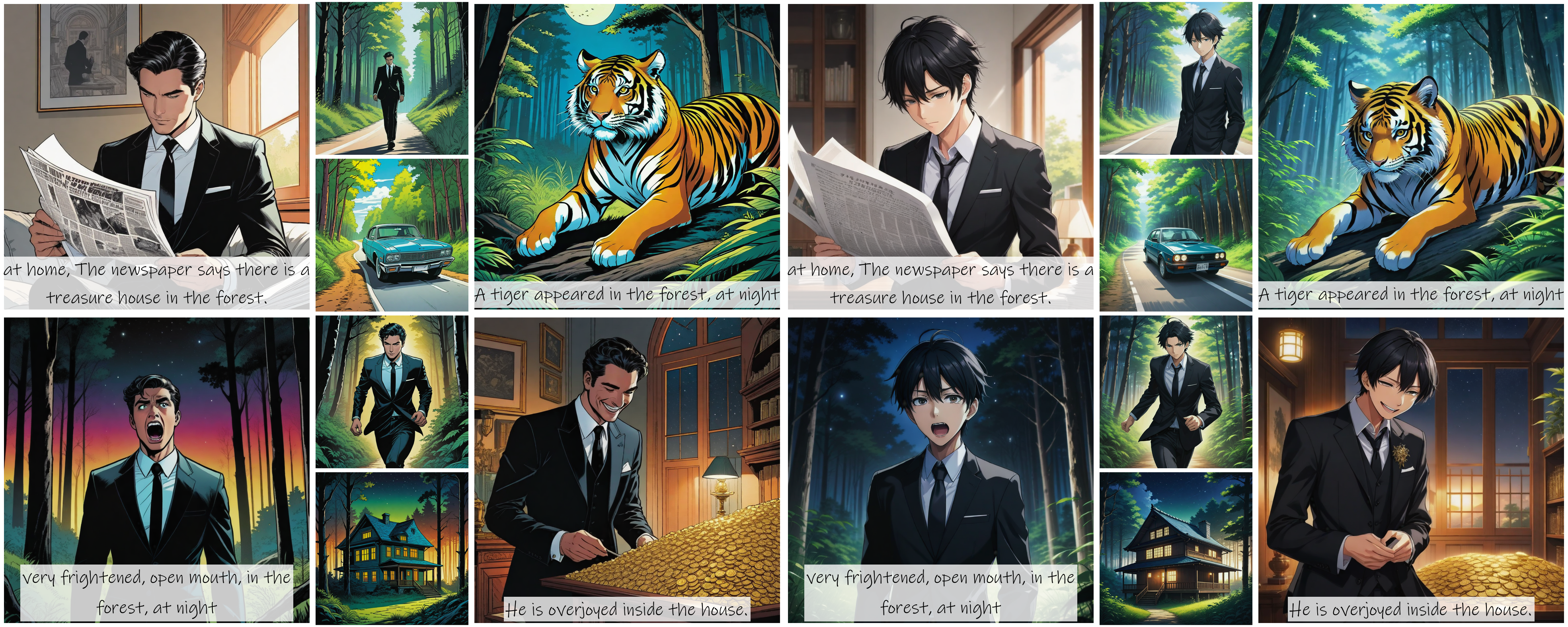

- Enter a Textual Description for the character. If you add a Ref-Image, make sure the class word you want to customize is followed by the trigger word: img, such as: man img or woman img or girl img.

- Enter the prompt array; each line corresponds to one generated image.

- Choose your preferred style template.

- If you need to change the caption, add a # at the end of the line, followed by the caption text. Only the part after the # will be added as a caption to the image.

- [NC] symbol: The [NC] symbol is a flag indicating that no characters should be present in the generated scene image. To use it, prepend “[NC]” to the beginning of the line. For example, to generate a scene of falling leaves without any character, write: “[NC] The leaves are falling.” Currently, [NC] is supported only when using a Textual Description.
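The prompt-array conventions above (one line per image, an optional # caption, an optional leading [NC] flag) can be illustrated with a small parser. This is only a sketch of how such lines might be interpreted, not the StoryDiffusion implementation: `PromptLine` and `parse_prompt_line` are hypothetical names, and using the prompt itself as the caption when no # is present is an assumption.

```python
from typing import NamedTuple


class PromptLine(NamedTuple):
    """Hypothetical container for one parsed line of the prompt array."""
    prompt: str         # text describing the image to generate
    caption: str        # text to display with the image
    no_character: bool  # True when the line starts with the [NC] flag


def parse_prompt_line(line: str) -> PromptLine:
    """Split one prompt-array line into prompt, caption, and [NC] flag."""
    text = line.strip()

    # A leading [NC] marks a scene with no character in it.
    no_character = text.startswith("[NC]")
    if no_character:
        text = text[len("[NC]"):].strip()

    # Only the part after the first '#' is used as the caption.
    if "#" in text:
        prompt, caption = (part.strip() for part in text.split("#", 1))
    else:
        # Assumption: without '#', the caption falls back to the prompt.
        prompt = caption = text

    return PromptLine(prompt, caption, no_character)


# Example prompt array: each non-empty line corresponds to one image.
prompt_array = """\
wake up in the bed # Morning
[NC] The leaves are falling.
"""
parsed = [parse_prompt_line(l) for l in prompt_array.splitlines() if l.strip()]
```

Here `parsed[0]` carries a custom caption, while `parsed[1]` is flagged as a character-free scene and keeps its prompt as the caption.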

🕸 Replicate

https://replicate.com/camenduru/story-diffusion

🧬 Code

https://github.com/HVision-NKU/StoryDiffusion

📄 Paper

https://arxiv.org/abs/2405.01434

🌐 Page

https://storydiffusion.github.io/