Readme

CogAgent

Model page: https://huggingface.co/THUDM/cogagent-chat-hf

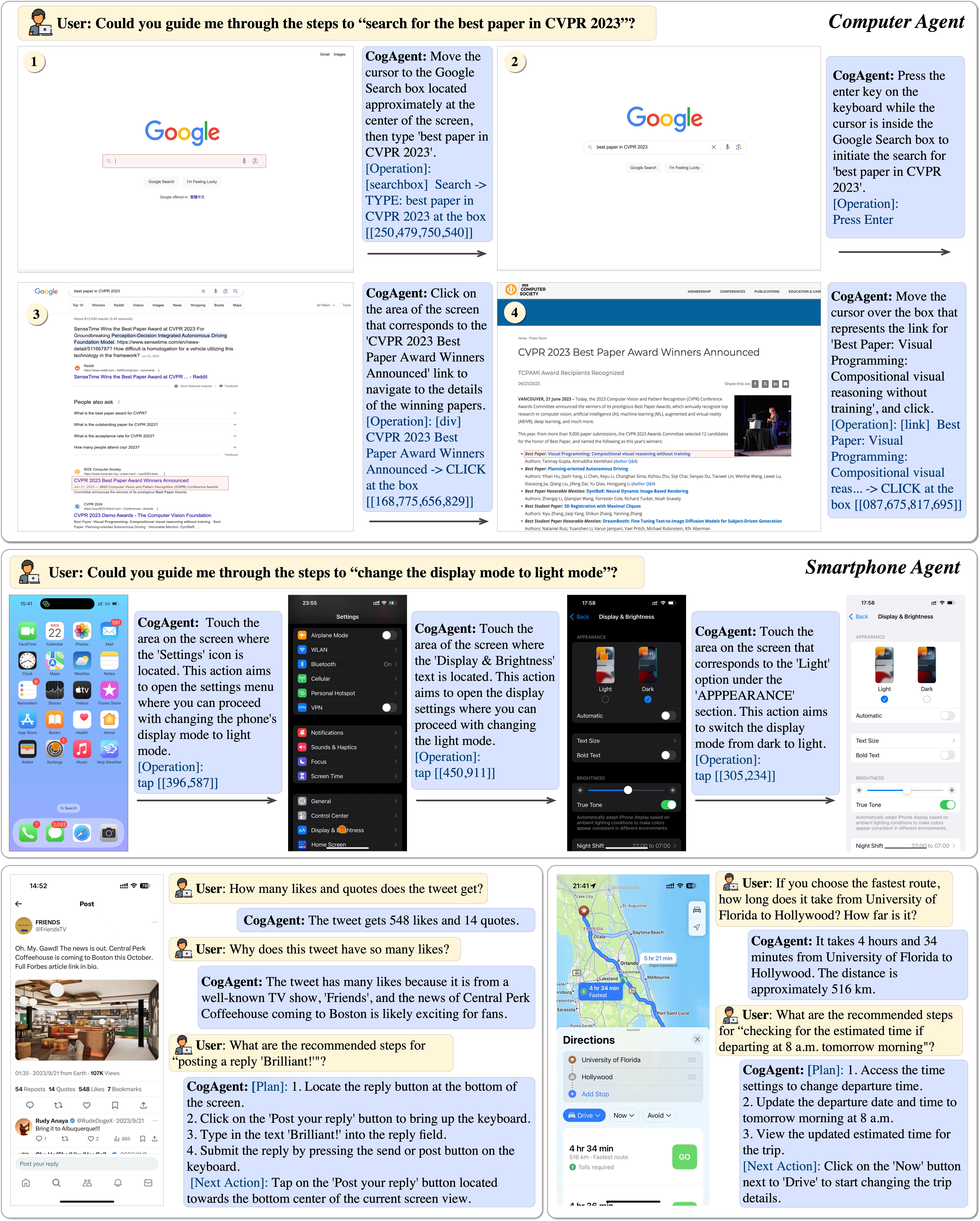

CogAgent is an open-source visual language model built on CogVLM. CogAgent-18B has 11 billion visual parameters and 7 billion language parameters.

CogAgent-18B achieves state-of-the-art generalist performance on 9 classic cross-modal benchmarks: VQAv2, OK-VQA, TextVQA, ST-VQA, ChartQA, InfoVQA, DocVQA, MM-Vet, and POPE. It also significantly surpasses existing models on GUI operation datasets such as AITW and Mind2Web.

In addition to all the features already present in CogVLM (visual multi-round dialogue, visual grounding), CogAgent:

- Supports higher-resolution visual input and dialogue question-answering, with ultra-high-resolution image inputs of 1120x1120.
- Possesses the capabilities of a visual agent: it can return a plan, the next action, and specific operations with coordinates for any given task on any GUI screenshot.
- Has enhanced GUI-related question-answering capabilities, allowing it to handle questions about any GUI screenshot, such as web pages, PC apps, and mobile applications.
- Has enhanced capabilities in OCR-related tasks through improved pre-training and fine-tuning.
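Because the agent returns operations with coordinates, a caller usually has to map those coordinates back onto the real screenshot before acting on them. The sketch below shows one way to do this. This README does not specify the output format, so the `[[x0,y0,x1,y1]]` box syntax, the 0-999 normalized grid, the sample response text, and the helper names `extract_boxes` and `box_center` are all assumptions made for illustration.

```python
import re
from typing import List, Tuple

Box = Tuple[int, int, int, int]

# Assumption: boxes appear in the response as [[x0,y0,x1,y1]], with integer
# values normalized to a 0..999 grid that is independent of image size.
BOX_PATTERN = re.compile(r"\[\[(\d+),\s*(\d+),\s*(\d+),\s*(\d+)\]\]")
GRID = 1000  # assumed size of the normalized coordinate grid


def extract_boxes(response: str, width: int, height: int) -> List[Box]:
    """Find every assumed-format box in `response` and scale it to pixels."""
    boxes = []
    for match in BOX_PATTERN.finditer(response):
        x0, y0, x1, y1 = (int(v) for v in match.groups())
        boxes.append((
            x0 * width // GRID,
            y0 * height // GRID,
            x1 * width // GRID,
            y1 * height // GRID,
        ))
    return boxes


def box_center(box: Box) -> Tuple[int, int]:
    """Return the pixel at the center of a box, e.g. as a click target."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) // 2, (y0 + y1) // 2)


# Hypothetical response text for a 1920x1080 screenshot.
sample = "Next action: click the search box [[100,200,300,400]]"
pixel_boxes = extract_boxes(sample, width=1920, height=1080)
click_target = box_center(pixel_boxes[0])
```

If the model's actual output uses a different box syntax or coordinate scale, only `BOX_PATTERN` and `GRID` should need to change.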

Citation & Acknowledgements

If you find our work helpful, please consider citing the following paper:

@misc{hong2023cogagent,
  title={CogAgent: A Visual Language Model for GUI Agents},
  author={Wenyi Hong and Weihan Wang and Qingsong Lv and Jiazheng Xu and Wenmeng Yu and Junhui Ji and Yan Wang and Zihan Wang and Yuxiao Dong and Ming Ding and Jie Tang},
  year={2023},
  eprint={2312.08914},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

The instruction fine-tuning phase of CogVLM used some English image-text data from the MiniGPT-4, LLaVA, LRV-Instruction, LLaVAR, and Shikra projects, as well as many classic cross-modal datasets. We sincerely thank them for their contributions.