Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model to recognize and generate new concepts, such as specific styles, characters, or objects, from a small set of example images. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

runwayml / gen4-turbo

Generate 5s and 10s 720p videos fast

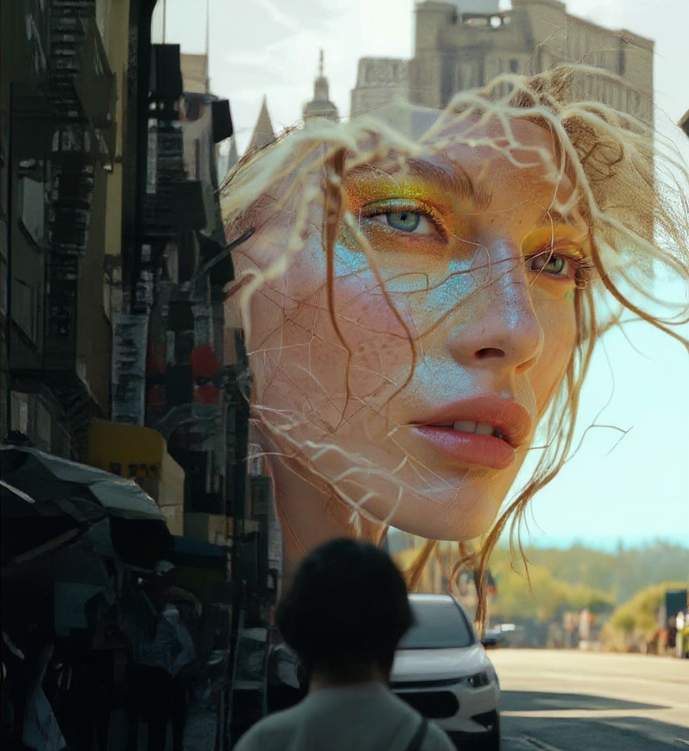

fofr / kontext-make-person-real

A FLUX Kontext fine-tune to fix plastic AI skin textures

google / veo-3-fast

A faster and cheaper version of Google’s Veo 3 video model, with audio

prunaai / wan-image

This model generates cinematic 2-megapixel images in 3-6 seconds. It is derived from the Wan2.1 model using optimisation techniques from the pruna package.

bytedance / seededit-3.0

Text-guided image editing model that preserves original details while making targeted modifications like lighting changes, object removal, and style conversion

replicate / fast-flux-kontext-trainer

Fine-tune FLUX.1 Kontext to follow your own instructions

zsxkib / thinksound

Generate contextual audio from video using step-by-step reasoning 🎶

minimax / hailuo-02

Hailuo 2 is a text-to-video and image-to-video model that can make 6s or 10s videos at 720p (standard) or 1080p (pro). It excels at real world physics.

ideogram-ai / ideogram-v3-turbo

Turbo is the fastest and cheapest version of Ideogram v3, which creates images with stunning realism, creative designs, and consistent styles

Official models

Official models are always on, maintained, and have predictable pricing.

I want to…

Generate images

Use AI to generate images and photos with an API

Caption videos

Use AI to caption videos with an API

Generate speech

Convert text to speech

Use a face to make images

Make realistic images of people instantly

Generate videos

Use AI to generate videos with an API

Upscale images

Upscaling models that create high-quality images from low-quality images

Generate music

Use AI to generate music with an API

Edit images

Use AI to edit any image with an API

Transcribe speech

Models that convert speech to text

Extract text from images

Optical character recognition (OCR) and text extraction

Remove backgrounds

Models that remove backgrounds from images and videos

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

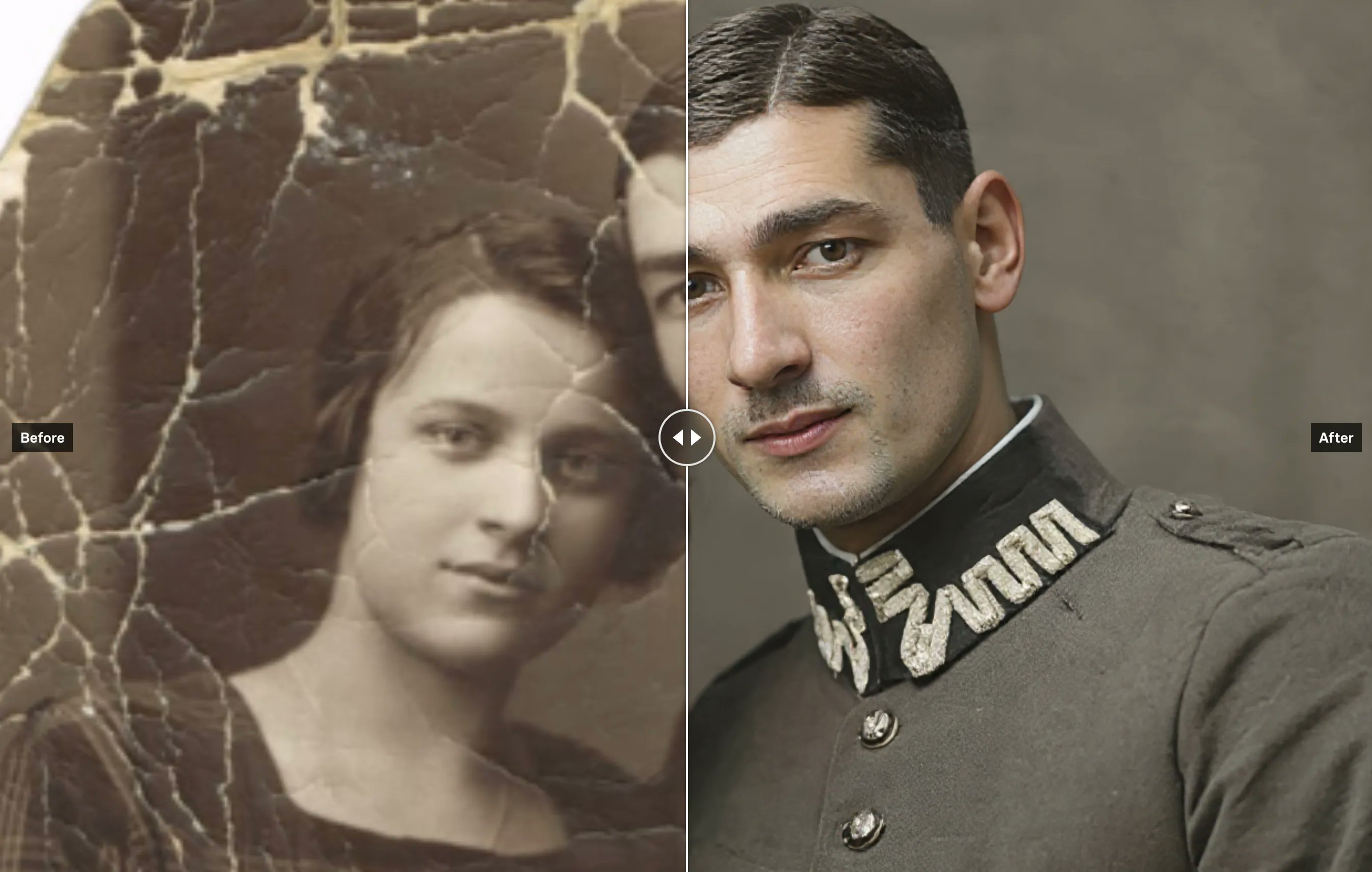

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Enhance videos

Upscaling models that create high-quality video from low-quality videos

Use Kontext fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Caption images

Use AI to caption images with an API

Edit videos

Tools for editing videos.

Chat with images

Ask language models about images

Use LLMs

Models that can understand and generate text

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest-quality open-source video generation model.

Use handy tools

Toolbelt-type models for videos and images.

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Sing with voices

Voice-to-voice cloning and musical prosody

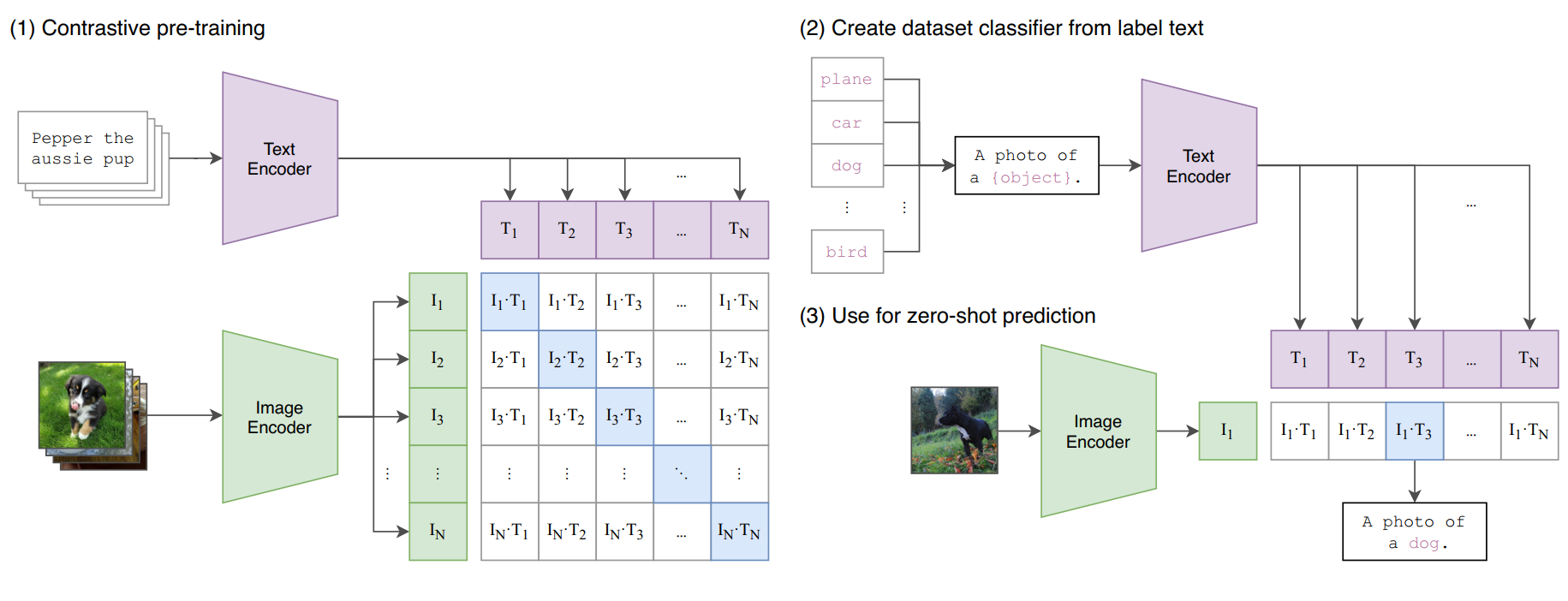

Get embeddings

Models that generate embeddings from inputs

Try for free

Get started with these models without adding a credit card. Whether you're making videos, generating images, or upscaling photos, these are great starting points.

Use official models

Official models are always on, maintained, and have predictable pricing.

Detect objects

Models that detect or segment objects in images and videos.

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Popular models

This is the fastest FLUX.1 [dev] endpoint in the world. Contact us at pruna.ai for more.

whisper-large-v3, incredibly fast, with video transcription

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

Return CLIP features for the clip-vit-large-patch14 model

whisper-large-v3, incredibly fast, powered by Hugging Face Transformers! 🤗

Generate CLIP (clip-vit-large-patch14) text & image embeddings

Latest models

Accelerated variant of Photon prioritizing speed while maintaining quality

This is a 3x faster FLUX.1 [schnell] model from Black Forest Labs, optimised with pruna with minimal quality loss. Contact us for more at pruna.ai

This is the fastest FLUX.1 [dev] endpoint in the world. Contact us at pruna.ai for more.

Generate 5s and 9s 720p videos, faster and cheaper than Ray 2

Change the aspect ratio of any video up to 30 seconds long. Outputs are 720p.

Generate 5s and 9s 540p videos, faster and cheaper than Ray 2

Fast, high quality text-to-video and image-to-video (Also known as Dream Machine)

Turn your image into a cartoon with FLUX.1 Kontext [pro]

FLUX Kontext max with list input for multiple images

An experimental model with FLUX Kontext Pro that can combine two input images

Use flux-kontext-pro to change the first or last frame of a video. The edited frames are useful as inputs for restyling an entire video in a particular way.

Generate native long-form video, with controllability

Experience impossible adventures and extreme scenarios from a single image

An experimental FLUX Kontext model that can combine two input images

Put yourself in an iconic location around the world from a single image

Use FLUX Kontext to restore, fix scratches and damage, and colorize old photos

Create a series of portrait photos from a single image

Create a professional headshot photo from any single image

A premium text-based image editing model that delivers maximum performance and improved typography generation for transforming images through natural language prompts

Quickly change someone's hair style and hair color, powered by FLUX.1 Kontext [pro]

A state-of-the-art text-based image editing model that delivers high-quality outputs with excellent prompt following and consistent results for transforming images through natural language

Make any car a flying car. This model uses a kontext-dev LoRA and seedance-1-pro, which are called across multiple predictions.

Transform a collection of images into a video slideshow

SANA-Sprint: One-Step Diffusion with Continuous-Time Consistency Distillation

Granite-speech-3.3-8b is a compact and efficient speech-language model, specifically designed for automatic speech recognition (ASR) and automatic speech translation (AST).

Granite-vision-3.3-2b is a compact and efficient vision-language model, specifically designed for visual document understanding, enabling automated content extraction from tables, charts, infographics, plots, diagrams, and more.

Hailuo 2: text-to-video and image-to-video model that can make 6s or 10s videos at 4k

FLUX.1 Kontext [dev] image editing model for running LoRA fine-tunes

Runway's Gen-4 Image model with references. Use up to 3 reference images to create the exact image you need. Capture every angle.

Compare Kontext, gpt-image-1, Runway Gen-4, and SeedEdit for character-based images

The Qwen3 Embedding model series is specifically designed for text embedding and ranking tasks