Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model on a small set of example images so it learns to recognize and generate new concepts, such as specific styles, characters, or objects. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

bytedance / seedance-1-pro

A pro version of Seedance that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 1080p resolution

bytedance / seedance-1-lite

A video generation model that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 720p resolution

kwaivgi / kling-v2.1

Use Kling v2.1 to generate 5s and 10s videos in 720p and 1080p resolution from a starting image (image-to-video)

google / veo-3

Sound on: Google’s flagship Veo 3 text-to-video model, with audio

google / imagen-4-ultra

Use this ultra version of Imagen 4 when quality matters more than speed and cost

replicate / fast-flux-trainer

Train subjects or styles faster than ever

black-forest-labs / flux-kontext-pro

A state-of-the-art text-based image editing model that delivers high-quality outputs with excellent prompt following and consistent results for transforming images through natural language

black-forest-labs / flux-kontext-max

A premium text-based image editing model that delivers maximum performance and improved typography generation for transforming images through natural language prompts

ideogram-ai / ideogram-v3-turbo

Turbo is the fastest and cheapest version of Ideogram v3, which creates images with stunning realism, creative designs, and consistent styles

Official models

Official models are always on, maintained, and have predictable pricing.

I want to…

Generate images

Models that generate images from text prompts

Generate videos

Models that create and edit videos

Edit images

Tools for editing images.

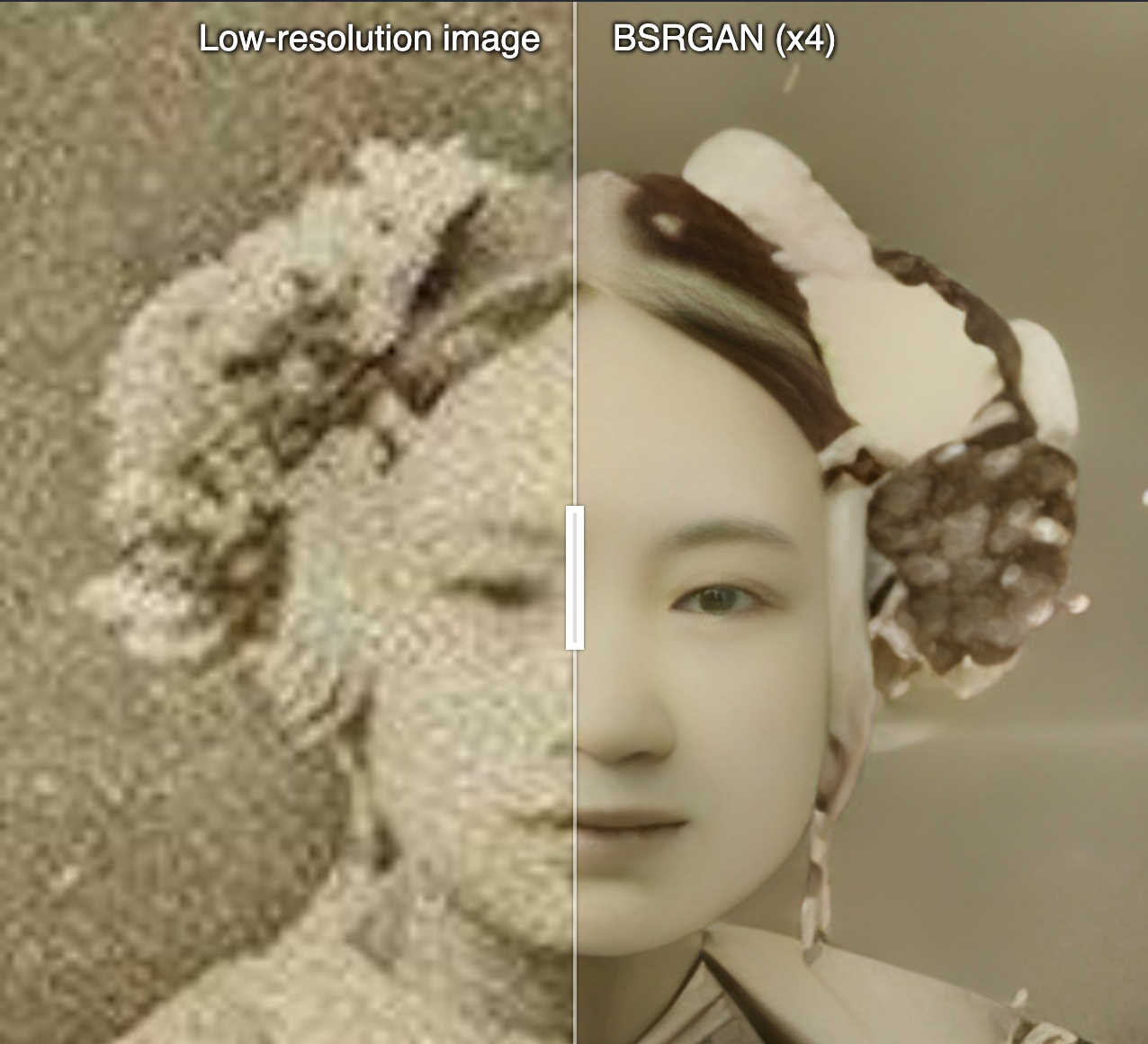

Upscale images

Upscaling models that create high-quality images from low-quality images

Generate speech

Convert text to speech

Transcribe speech

Models that convert speech to text

Use LLMs

Models that can understand and generate text

Caption videos

Models that generate text from videos

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Generate music

Models to generate and modify music

Caption images

Models that generate text from images

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest-quality open-source video generation model.

Use handy tools

Toolbelt-type models for videos and images.

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Extract text from images

Optical character recognition (OCR) and text extraction

Chat with images

Ask language models about images

Sing with voices

Voice-to-voice cloning and musical prosody

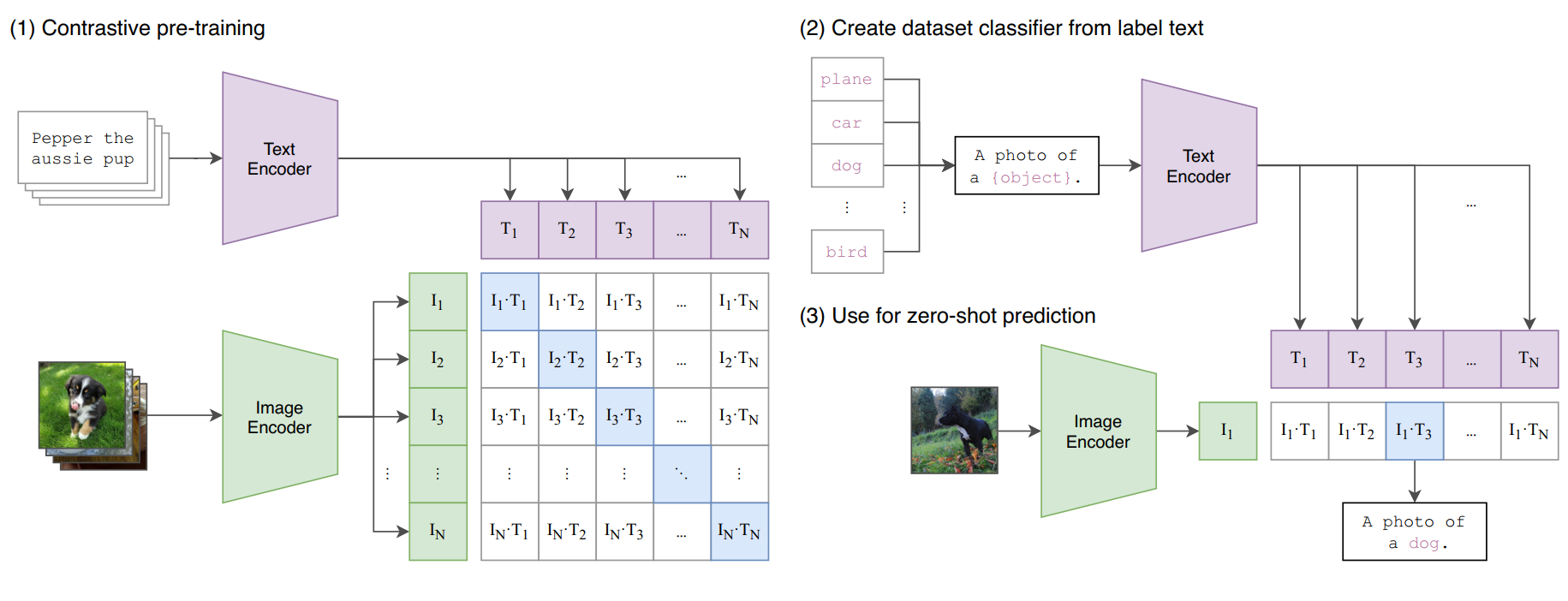

Get embeddings

Models that generate embeddings from inputs

Use a face to make images

Make realistic images of people instantly

Remove backgrounds

Models that remove backgrounds from images and videos

Try for free

Get started with these models without adding a credit card. Whether you're making videos, generating images, or upscaling photos, these are great starting points.

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Use official models

Official models are always on, maintained, and have predictable pricing.

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Detect objects

Models that detect or segment objects in images and videos.

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

This is the fastest Flux Dev endpoint in the world. Contact us at pruna.ai for more.

Practical face restoration algorithm for *old photos* or *AI-generated faces*

Return CLIP features for the clip-vit-large-patch14 model

Generate CLIP (clip-vit-large-patch14) text & image embeddings

Latest models

Ultimate anime-themed finetuned SDXL model and the latest installment of the Animagine XL series

Interior Design with RealVisXL V5.0 and ControlNet (Depth & Union SDXL ProMax) to generate photorealistic, high-resolution interior designs with enhanced depth and structure.

STAR Video Upscaler: Spatial-Temporal Augmentation with Text-to-Video Models for Real-World Video Super-Resolution

Takes audio (mp3) and a "source-of-truth" audio transcript (string) as input and returns precise timestamps.

DeepSeek-R1 distilled into LLaMA3.3 70B and quantized by Ollama

Customize your hair with AI: swap hairstyles with anyone or copy anyone's hair color.

A reasoning model trained with reinforcement learning, on par with OpenAI o1

Adapted to add multi-LoRA support for schnell as well: https://replicate.com/lucataco/flux-dev-multi-lora

Run any ComfyUI workflow on an A100. Guide: https://github.com/fofr/cog-comfyui

Create a dotted waveform video from an audio file

A state-of-the-art text-to-video generation model capable of creating high-quality videos with realistic motion from text descriptions

This model classifies weather conditions based on images. It uses a Convolutional Neural Network (CNN) trained on various weather phenomena to predict the weather condition of a given image.

DeepSeek's first-generation reasoning models, with performance comparable to OpenAI o1

Robust face restoration algorithm for old photos / AI-generated faces

A FLUX-based virtual try-on model trained on VITON-HD, supporting categories such as upper body, lower body, and full-body dresses

Change the strength of the prompt to enable editing style and content. Recommendation: keep the seed constant and tune the strength.