Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model to recognize and generate new concepts using a small set of example images, for specific styles, characters, or objects. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

luma / reframe-video

Change the aspect ratio of any video up to 30 seconds long. Outputs are 720p.

google / imagen-4-fast

Use this fast version of Imagen 4 when speed and cost are more important than quality

google / imagen-4-ultra

Use this ultra version of Imagen 4 when quality matters more than speed and cost

google / imagen-4

Google's Imagen 4 flagship model

replicate / fast-flux-trainer

Train subjects or styles faster than ever

google / veo-3

Sound on: Google’s flagship Veo 3 text-to-video model, with audio

black-forest-labs / flux-kontext-pro

A state-of-the-art text-based image editing model that delivers high-quality outputs with excellent prompt following and consistent results for transforming images through natural language

black-forest-labs / flux-kontext-max

A premium text-based image editing model that delivers maximum performance and improved typography generation for transforming images through natural language prompts

ideogram-ai / ideogram-v3-turbo

Turbo is the fastest and cheapest Ideogram v3 model. Ideogram v3 creates images with stunning realism, creative designs, and consistent styles

Official models

Official models are always on, maintained, and have predictable pricing.

I want to…

Generate images

Models that generate images from text prompts

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest-quality open-source video generation model.

Generate videos

Models that create and edit videos

Caption images

Models that generate text from images

Transcribe speech

Models that convert speech to text

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Remove backgrounds

Models that remove backgrounds from images and videos

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Caption videos

Models that generate text from videos

Edit images

Tools for manipulating images.

Use a face to make images

Make realistic images of people instantly

Get embeddings

Models that generate embeddings from inputs

Generate speech

Convert text to speech

Generate music

Models to generate and modify music

Generate text

Models that can understand and generate text

Use handy tools

Toolbelt-type models for videos and images.

Upscale images

Upscaling models that create high-quality images from low-quality images

Use official models

Official models are always on, maintained, and have predictable pricing.

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Detect objects

Models that detect or segment objects in images and videos.

Sing with voices

Voice-to-voice cloning and musical prosody

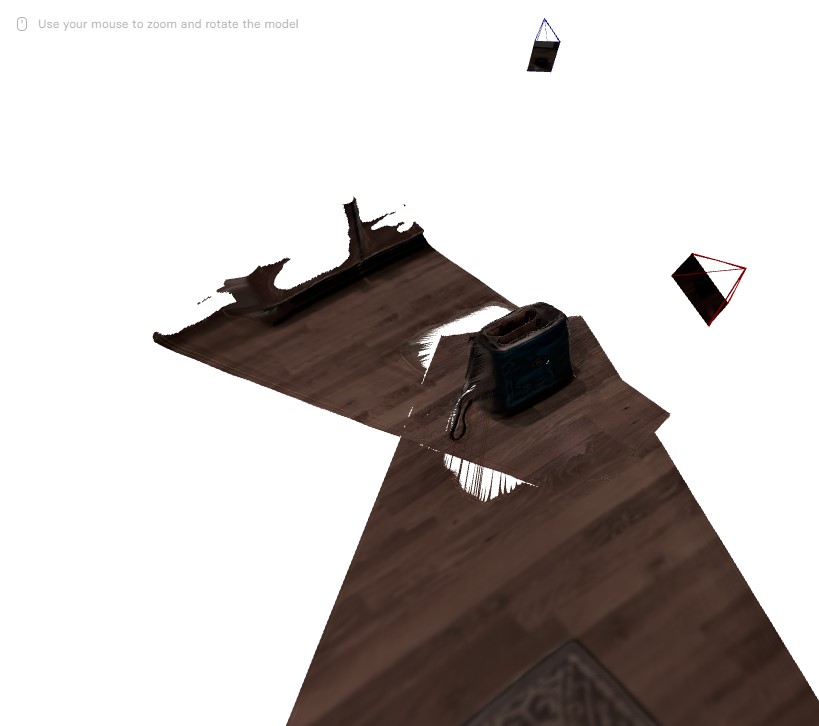

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Chat with images

Ask language models about images

Extract text from images

Optical character recognition (OCR) and text extraction

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

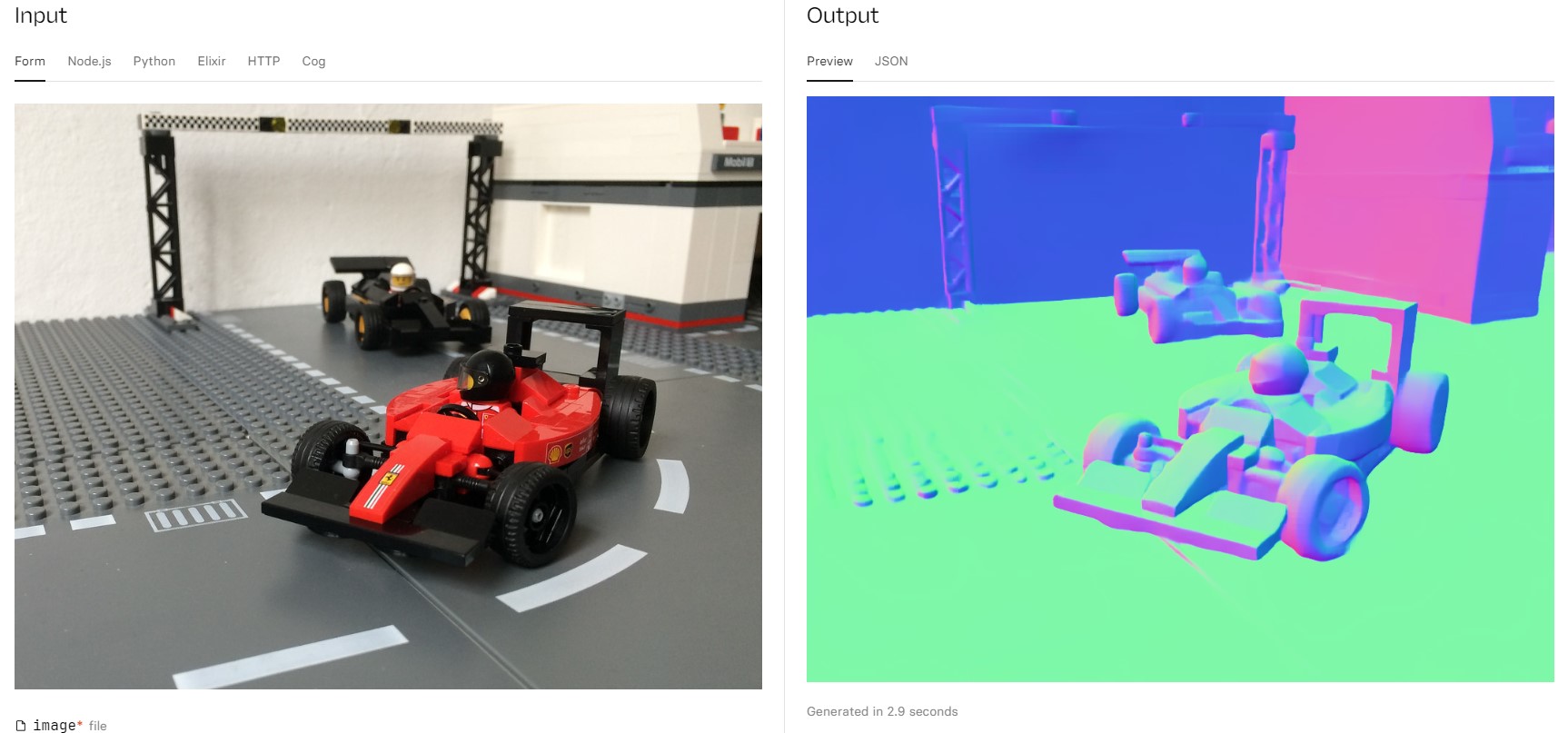

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

This is an optimised version of the FLUX.1 [schnell] model from Black Forest Labs made with Pruna. We achieve a ~3x speedup over the original model with minimal quality loss.

Return CLIP features for the clip-vit-large-patch14 model

whisper-large-v3, incredibly fast, powered by Hugging Face Transformers! 🤗

🦙 LaMa: Resolution-robust Large Mask Inpainting with Fourier Convolutions

Practical face restoration algorithm for *old photos* or *AI-generated faces*

Latest models

Generate a model with a garment faster if you have a mask image

Find out how similar Japanese sentences are

Fast text-to-3D Gaussian generation by bridging 2D and 3D diffusion models

Yuan2.0 is a new-generation LLM developed by IEIT System, with enhanced understanding of semantics, mathematics, reasoning, code, knowledge, and other aspects.

A diffusion-based method to enhance visual consistency for I2V generation

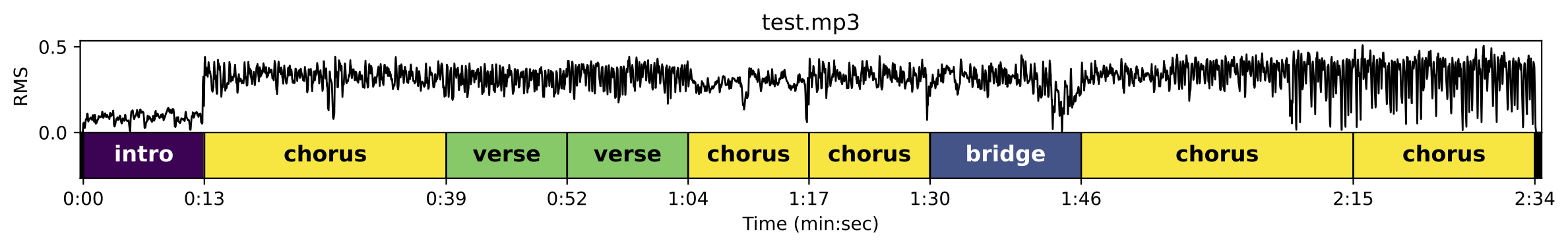

AI Music Structure Analyzer + Stem Splitter using Demucs & Mdx-Net with Python-Audio-Separator

SDXL Lightning multi-ControlNet, img2img & inpainting

dreamshaper-xl-lightning is a Stable Diffusion model that has been fine-tuned on SDXL

Lightweight multimodal model for visual question answering, reasoning and captioning

Function calling LLM that surpasses the state-of-the-art in function calling capabilities

OpenCodeInterpreter: Integrating Code Generation with Execution and Refinement

Segments an audio recording based on who is speaking

Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild. This is the SUPIR-v0F model and does NOT use LLaVA-13b.

Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild. This is the SUPIR-v0Q model and does NOT use LLaVA-13b.

Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild. This version uses LLaVA-13b for captioning.

Proof-of-concept implementation of Depth Anything that produces a 3D side-by-side (SBS) video

ProteusV0.4: The Style Update - enhances stylistic capabilities, similar to Midjourney's approach, rather than advancing prompt comprehension

A collection of anime Stable Diffusion models with VAEs and LoRAs.