Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model to recognize and generate new concepts using a small set of example images, for specific styles, characters, or objects. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

bytedance / seedance-1-pro

A pro version of Seedance that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 1080p resolution

black-forest-labs / flux-kontext-max

A premium text-based image editing model that delivers maximum performance and improved typography generation for transforming images through natural language prompts

black-forest-labs / flux-kontext-pro

A state-of-the-art text-based image editing model that delivers high-quality outputs with excellent prompt following and consistent results for transforming images through natural language

runwayml / gen4-image

Runway's Gen-4 Image model with references. Use up to 3 reference images to create the exact image you need. Capture every angle.

black-forest-labs / flux-kontext-dev

Open-weight version of FLUX.1 Kontext

bytedance / seedream-3

A text-to-image model with support for native high-resolution (2K) image generation

bytedance / seedance-1-lite

A video generation model that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 720p resolution

kwaivgi / kling-v2.1

Use Kling v2.1 to generate 5s and 10s videos in 720p and 1080p resolution from a starting image (image-to-video)

ideogram-ai / ideogram-v3-quality

The highest quality Ideogram v3 model. v3 creates images with stunning realism, creative designs, and consistent styles

Official models

Official models are always on, maintained, and have predictable pricing.

I want to…

Generate images

Models that generate images from text prompts

Generate videos

Models that create and edit videos

Edit images

Tools for editing images.

Upscale images

Upscaling models that create high-quality images from low-quality images

Generate speech

Convert text to speech

Transcribe speech

Models that convert speech to text

Use LLMs

Models that can understand and generate text

Caption videos

Models that generate text from videos

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Generate music

Models to generate and modify music

Caption images

Models that generate text from images

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest quality open-source video generation model.

Use handy tools

Toolbelt-type models for videos and images.

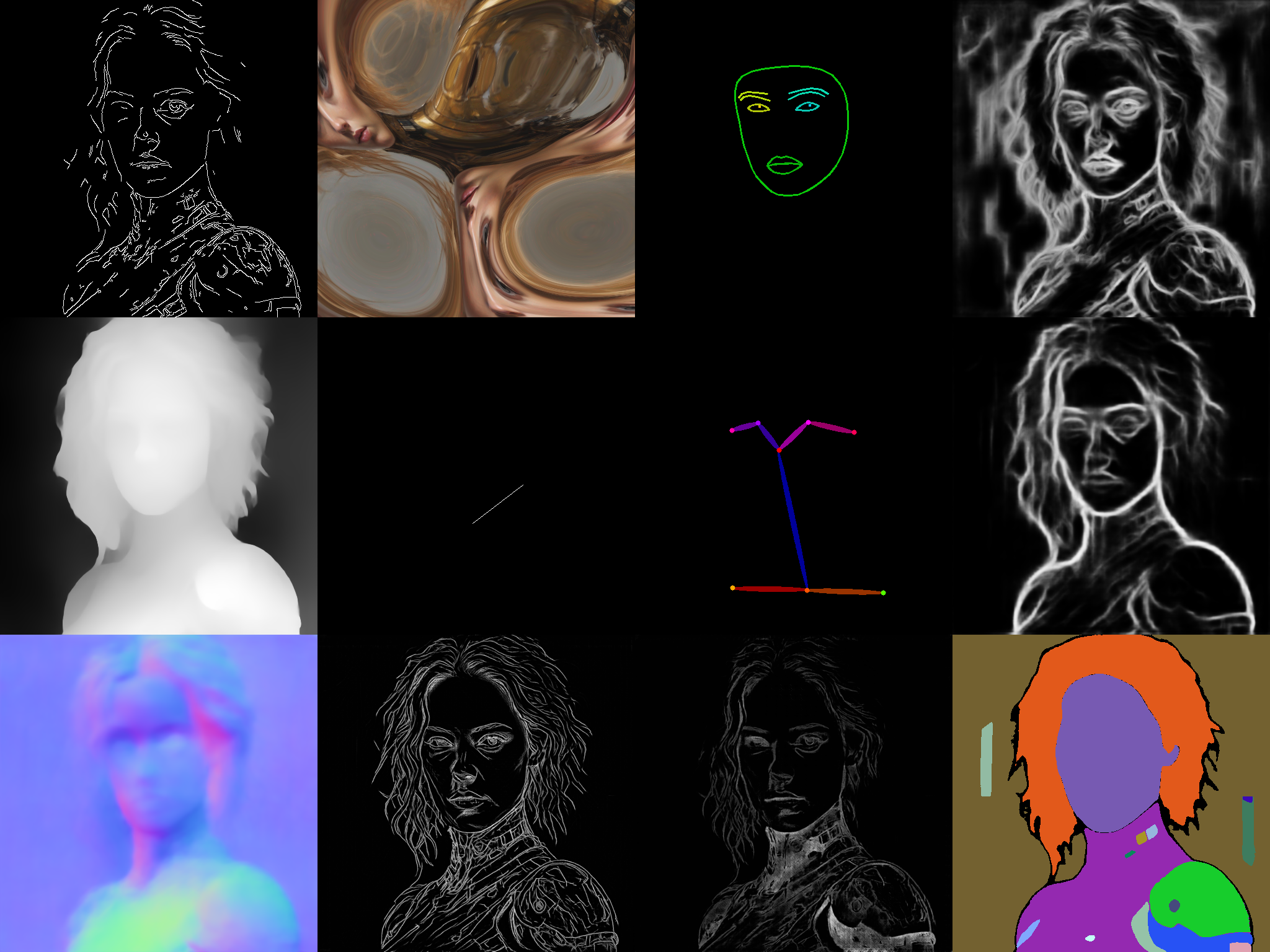

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Extract text from images

Optical character recognition (OCR) and text extraction

Chat with images

Ask language models about images

Sing with voices

Voice-to-voice cloning and musical prosody

Get embeddings

Models that generate embeddings from inputs

Use a face to make images

Make realistic images of people instantly

Remove backgrounds

Models that remove backgrounds from images and videos

Try for free

Get started with these models without adding a credit card. Whether you're making videos, generating images, or upscaling photos, these are great starting points.

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Use official models

Official models are always on, maintained, and have predictable pricing.

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Detect objects

Models that detect or segment objects in images and videos.

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

Return CLIP features for the clip-vit-large-patch14 model

Run any ComfyUI workflow. Guide: https://github.com/replicate/cog-comfyui

This is the fastest Flux Dev endpoint in the world. Contact us for more at pruna.ai

Practical face restoration algorithm for *old photos* or *AI-generated faces*

whisper-large-v3, incredibly fast, powered by Hugging Face Transformers! 🤗

Latest models

Create song covers with any RVC v2 trained AI voice from audio files.

A combination of ip_adapter SDv1.5 and mediapipe-face to inpaint a face

The Yi series models are large language models trained from scratch by developers at 01.AI.

The Yi series models are large language models trained from scratch by developers at 01.AI.

The Yi series models are large language models trained from scratch by developers at 01.AI.

Custom improvements, such as a custom callback to enhance inference | It's a WIP and may cause some wrong outputs

An extremely fast all-in-one model to use LCM with SDXL, ControlNet and custom LoRA URLs!

Create variations of an uploaded image. Please see README for more details

Source: meta-llama/Llama-2-7b-chat-hf ✦ Quant: TheBloke/Llama-2-7B-Chat-AWQ ✦ Intended for assistant-like chat

Source: meta-math/MetaMath-Mistral-7B ✦ Quant: TheBloke/MetaMath-Mistral-7B-AWQ ✦ Bootstrap Your Own Mathematical Questions for Large Language Models

Source: Severian/ANIMA-Phi-Neptune-Mistral-7B ✦ Quant: TheBloke/ANIMA-Phi-Neptune-Mistral-7B-AWQ ✦ Biomimicry Enhanced LLM

Animate Your Personalized Text-to-Image Diffusion Models (Long boot times!)

Latent Consistency Model (LCM) for SDXL: distills the original model into a version that requires fewer steps (4 to 8 instead of the original 25 to 50)

Generate high-quality images faster with Latent Consistency Models (LCM), a novel approach that distills the original model, reducing the steps required from 25-50 to just 4-8 in Stable Diffusion (SDXL) image generation.

The image prompt adapter is designed to enable a pretrained text-to-image diffusion model to generate SDXL images with an image prompt

Canny, soft edge, depth, lineart, segmentation, pose, etc

POC of SDXL-LCM LoRA combined with a Replicate LoRA for 4 second inference time

The image prompt adapter is designed to enable a pretrained text-to-image diffusion model to generate SDXL images with an image prompt

Latent Consistency Model (LCM) for SSD-1B: an LCM-distilled version that reduces the number of inference steps needed to only 2 to 8

OpenChat: Advancing Open-source Language Models with Mixed-Quality Data

The image prompt adapter is designed to enable a pretrained text-to-image diffusion model to generate SDv1.5 images with an image prompt

Segmind Stable Diffusion Model (SSD-1B) is a distilled version of SDXL that is 50% smaller and offers a 60% speedup while maintaining high-quality text-to-image generation capabilities

Batch mode for Segmind Stable Diffusion Model (SSD-1B) txt2img