fofr/hunyuan-take-on-me

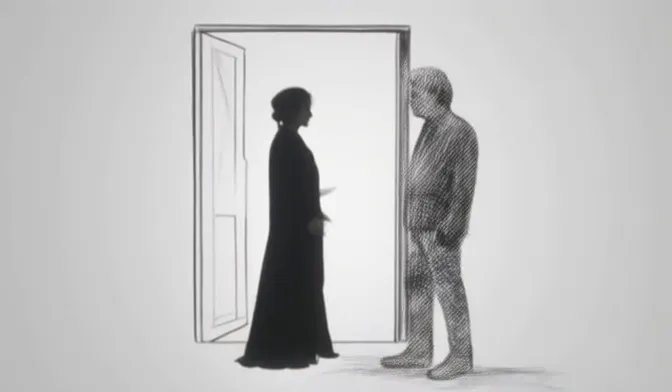

Hunyuan fine-tuned on 3-second clips from a-ha's "Take On Me" music video. Include the trigger word `TKONME` in your prompt.
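Fine-tuned models like this one usually produce the learned style only when the trigger word appears in the prompt. The following is a minimal sketch, not part of the model itself, of a helper that adds `TKONME` to a prompt if it is missing. Two details are assumptions. Putting the trigger at the start of the prompt is a common convention, not something the model card requires. The `"prompt"` key in the hypothetical `build_input` payload is a guess, so check the model's API schema for the real input names.

```python
TRIGGER = "TKONME"  # trigger word given in the model description


def with_trigger(prompt: str, trigger: str = TRIGGER) -> str:
    """Return the prompt with the trigger word prepended if it is not already present."""
    # Compare whitespace-separated tokens with trailing punctuation stripped,
    # so "TKONME," or "TKONME." count as already present.
    tokens = [t.strip(".,;:!?") for t in prompt.split()]
    if trigger in tokens:
        return prompt
    # Assumption: placing the trigger first is a convention, not a requirement.
    return f"{trigger} {prompt}".strip()


def build_input(prompt: str) -> dict:
    """Build a hypothetical input payload; the "prompt" key name is an assumption."""
    return {"prompt": with_trigger(prompt)}


if __name__ == "__main__":
    payload = build_input("a pencil-sketch man running through a comic-book world")
    print(payload["prompt"])
```

A prompt that already contains the trigger is returned unchanged. This means the helper can be applied more than once without stacking duplicate trigger words.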
