InstructIR ✏️🖼️

High-Quality Image Restoration Following Human Instructions

Computer Vision Lab, University of Wuerzburg | Sony PlayStation, FTG

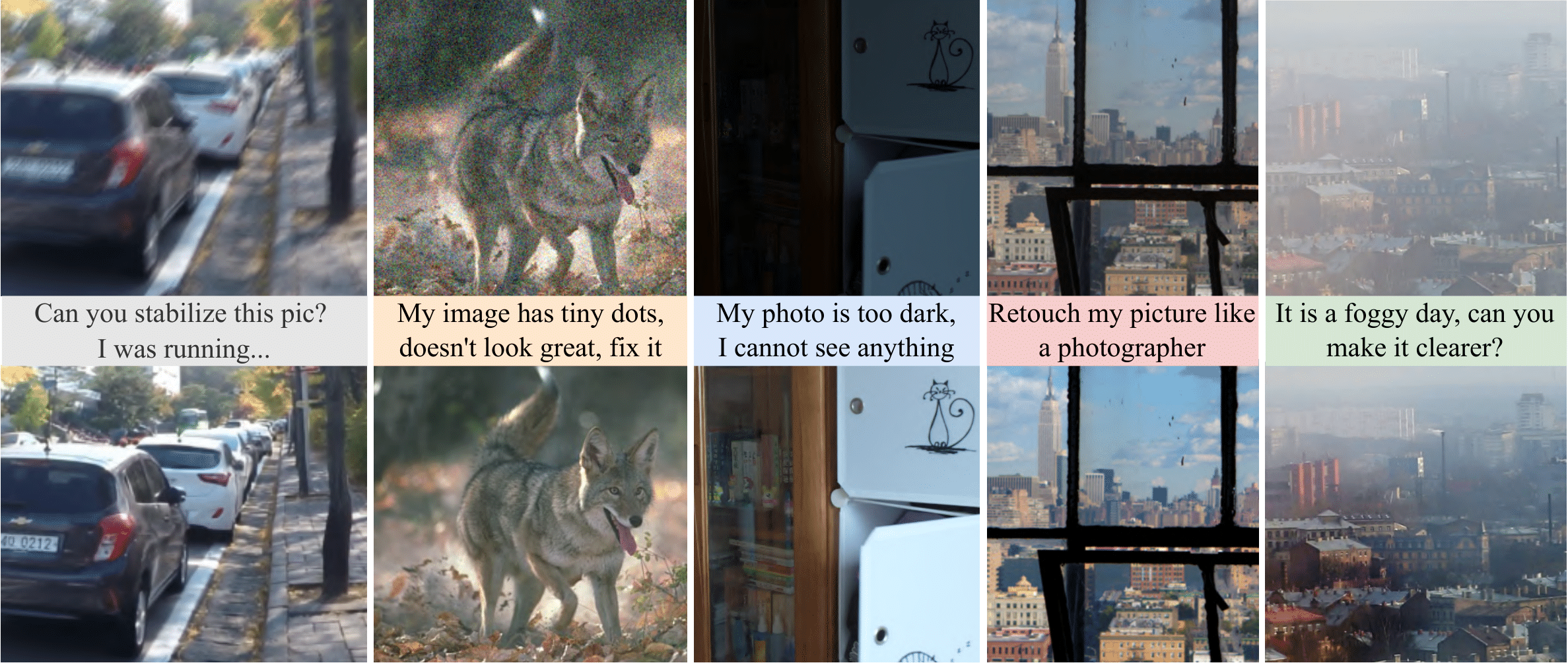

InstructIR takes as input an image and a human-written instruction describing how to improve that image. A single neural model performs all-in-one image restoration, and InstructIR achieves state-of-the-art results on several restoration tasks, including image denoising, deraining, deblurring, dehazing, and (low-light) image enhancement.
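To make the idea of instruction-guided restoration more concrete, here is a minimal sketch of one common way to condition a network on text: FiLM-style feature modulation, where a text embedding predicts a per-channel scale and shift for an image feature map. This is a generic technique chosen for illustration, not InstructIR's published architecture. `embed_instruction` is a hypothetical hash-based stand-in for a learned sentence encoder, and the random weights stand in for trained parameters.

```python
import zlib

import numpy as np


def embed_instruction(text: str, dim: int = 16) -> np.ndarray:
    """Hypothetical stand-in for a learned sentence encoder: a deterministic
    bag-of-words embedding built by hashing each word into one of `dim` bins,
    then normalised to unit length."""
    vec = np.zeros(dim)
    for word in text.lower().split():
        vec[zlib.crc32(word.encode("utf-8")) % dim] += 1.0
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec


def film(features: np.ndarray, text_emb: np.ndarray,
         w_gamma: np.ndarray, w_beta: np.ndarray) -> np.ndarray:
    """FiLM-style conditioning: scale and shift each channel of a (C, H, W)
    feature map using values predicted linearly from the text embedding."""
    gamma = w_gamma @ text_emb  # (C,) per-channel scale offset
    beta = w_beta @ text_emb    # (C,) per-channel shift
    return features * (1.0 + gamma[:, None, None]) + beta[:, None, None]


# Random weights stand in for trained parameters (illustration only).
rng = np.random.default_rng(0)
C, H, W, D = 8, 4, 4, 16
features = rng.standard_normal((C, H, W))
w_gamma = 0.1 * rng.standard_normal((C, D))
w_beta = 0.1 * rng.standard_normal((C, D))

for prompt in ["Remove the noise from this photo", "Please make my image brighter"]:
    out = film(features, embed_instruction(prompt, D), w_gamma, w_beta)
    print(f"{prompt!r} -> {out.shape}")
```

In this sketch the same weights serve every instruction; only the modulation applied to the features changes with the prompt. With a zero embedding, `gamma` and `beta` vanish and the features pass through unchanged.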

Image restoration is a fundamental problem that involves recovering a high-quality clean image from its degraded observation. All-in-one image restoration models can effectively restore images from various types and levels of degradation, using degradation-specific information as prompts to guide the restoration model. In this work, we present the first approach that uses human-written instructions to guide the image restoration model. Given natural language prompts, our model can recover high-quality images from their degraded counterparts across multiple degradation types. Our method, InstructIR, achieves state-of-the-art results on several restoration tasks, including image denoising, deraining, deblurring, dehazing, and (low-light) image enhancement. InstructIR improves by +1 dB over previous all-in-one restoration methods. Moreover, our dataset and results represent a novel benchmark for research on text-guided image restoration and enhancement.
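The +1 dB figure is easier to interpret with a quick calculation. Assuming the gain is measured in PSNR (the usual dB metric for these restoration tasks, although the text above does not state the metric), PSNR = 10·log10(MAX²/MSE), so a +1 dB gain corresponds to shrinking the mean squared error by a factor of 10^(-0.1) ≈ 0.794, roughly 21% less error. The MSE values below are hypothetical, not results from the paper.

```python
import math


def psnr(mse: float, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_val ** 2 / mse)


def mse_ratio_for_gain(gain_db: float) -> float:
    """Factor by which the MSE must shrink to raise PSNR by `gain_db` decibels."""
    return 10.0 ** (-gain_db / 10.0)


# Hypothetical baseline: MSE of 1e-3 on images scaled to [0, 1].
baseline_mse = 1e-3
improved_mse = baseline_mse * mse_ratio_for_gain(1.0)

print(f"baseline PSNR: {psnr(baseline_mse):.2f} dB")  # 30.00 dB
print(f"improved PSNR: {psnr(improved_mse):.2f} dB")  # 31.00 dB
print(f"MSE reduction: {1 - mse_ratio_for_gain(1.0):.1%}")  # 20.6%
```

Because PSNR is logarithmic in the error, the same +1 dB always means the same relative MSE reduction, regardless of the baseline quality.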

Acknowledgments

This work was partly supported by the Humboldt Foundation (AvH). Marcos Conde is also supported by Sony Interactive Entertainment, FTG.

This work is inspired by InstructPix2Pix.

Contacts

For any inquiries, contact Marcos V. Conde: marcos.conde [at] uni-wuerzburg.de

Citation BibTeX

@misc{conde2024instructir,
  title={High-Quality Image Restoration Following Human Instructions},
  author={Marcos V. Conde and Gregor Geigle and Radu Timofte},
  year={2024},
  howpublished={arXiv preprint},
}