StyleCLIPDraw

Peter Schaldenbrand, Zhixuan Liu, and Jean Oh (September 2021)

Featured at the 2021 NeurIPS Workshop on Machine Learning and Design. Also available as an arXiv preprint.

Note: This version of StyleCLIPDraw is optimized for short runtime, so its results will differ somewhat from those of the original model.

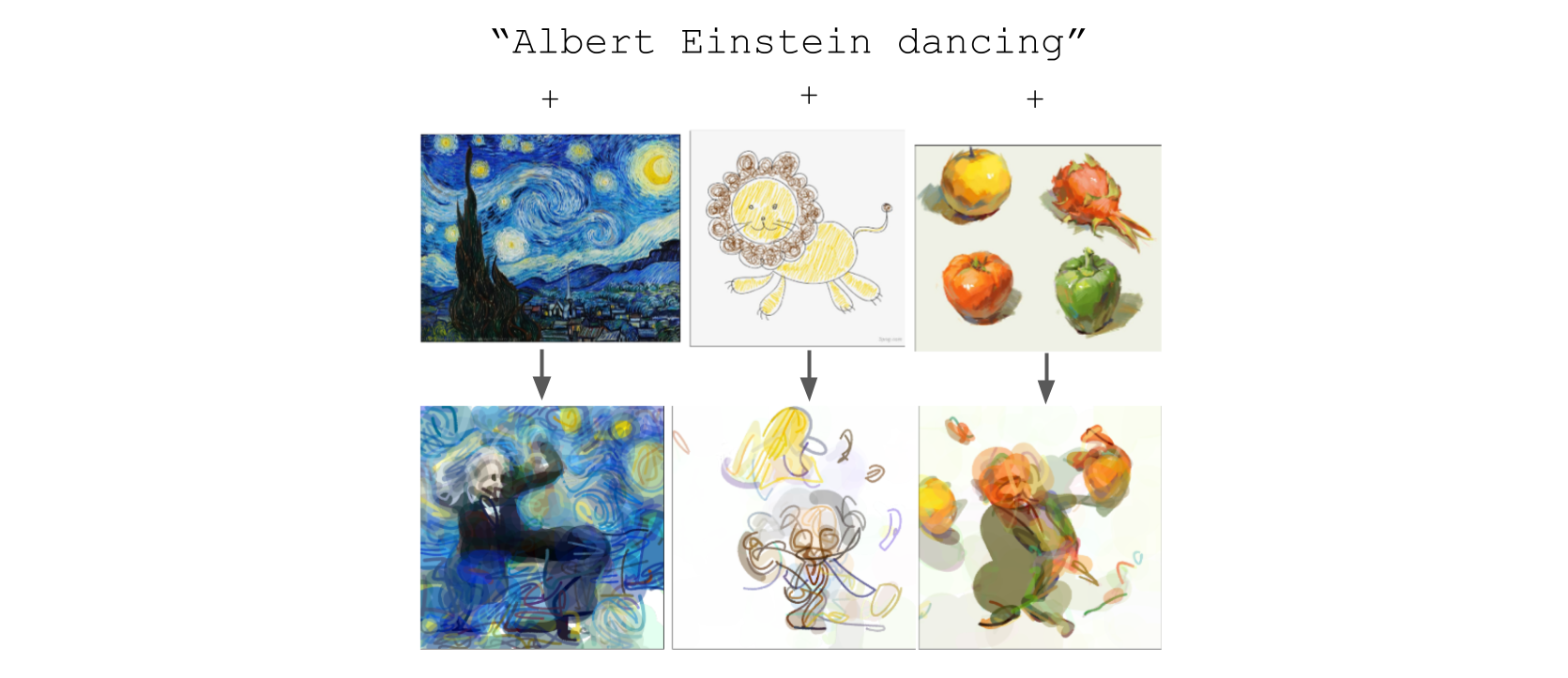

StyleCLIPDraw adds a style loss to the CLIPDraw text-to-drawing synthesis model (Frans et al. 2021), allowing artistic control over the style of the synthesized drawings in addition to control over their content via text. Decoupled style transfer, applied to an image after it has been generated, affects only its texture. Our coupled approach, which optimizes the style loss during drawing synthesis itself, captures style in both texture and shape. This suggests that the style of a drawing is coupled with the drawing process itself.
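The following is a minimal sketch, not the authors' implementation, of how a text-driven content loss and a style loss might be combined into a single objective. It makes several assumptions. CLIP image and text embeddings, plus per-layer CNN feature maps of the drawing and the style image (for example, from VGG), are taken as already computed. The content loss is the negative cosine similarity between the CLIP embeddings. The style term is a Gram-matrix loss in the manner of Gatys et al., used here as a stand-in that may not match the style loss StyleCLIPDraw actually uses. The names `clip_content_loss`, `gram_style_loss`, `total_loss`, and `style_weight` are hypothetical. NumPy only evaluates the loss here. In the coupled setting, the loss would instead be minimized with respect to the drawing's stroke parameters through a differentiable rasterizer.

```python
import numpy as np

def clip_content_loss(image_emb: np.ndarray, text_emb: np.ndarray) -> float:
    """Negative cosine similarity between a CLIP image embedding and a text embedding
    (assumed content objective; lower means the drawing better matches the prompt)."""
    a = image_emb / np.linalg.norm(image_emb)
    b = text_emb / np.linalg.norm(text_emb)
    return float(-np.dot(a, b))

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (channels, height, width) feature map, normalized by spatial size."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def gram_style_loss(drawing_feats, style_feats) -> float:
    """Stand-in style loss: mean squared Gram-matrix difference, summed over layers."""
    return float(sum(
        np.mean((gram_matrix(d) - gram_matrix(s)) ** 2)
        for d, s in zip(drawing_feats, style_feats)
    ))

def total_loss(image_emb, text_emb, drawing_feats, style_feats, style_weight=1.0) -> float:
    """Hypothetical combined objective: content term plus weighted style term."""
    return clip_content_loss(image_emb, text_emb) + style_weight * gram_style_loss(
        drawing_feats, style_feats
    )

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image_emb, text_emb = rng.normal(size=512), rng.normal(size=512)
    drawing = [rng.normal(size=(8, 4, 4)), rng.normal(size=(16, 2, 2))]
    style = [rng.normal(size=(8, 4, 4)), rng.normal(size=(16, 2, 2))]
    print(total_loss(image_emb, text_emb, drawing, style, style_weight=10.0))
```

Because the style term sits inside the same objective that shapes the strokes, its gradients can change stroke geometry as well as color. A post-hoc (decoupled) transfer step has no access to stroke geometry.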