Explore

Fine-tune FLUX fast

Customize FLUX.1 [dev] with the fast FLUX trainer on Replicate

Train the model on a small set of example images so it can recognize and generate new concepts such as specific styles, characters, or objects. It's fast (under 2 minutes), cheap (under $2), and gives you a warm, runnable model plus LoRA weights to download.

Featured models

bytedance / seedream-3

A text-to-image model with support for native high-resolution (2K) image generation

bytedance / seedance-1-pro

A pro version of Seedance that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 1080p resolution

bytedance / seedance-1-lite

A video generation model that offers text-to-video and image-to-video support for 5s or 10s videos, at 480p and 720p resolution

kwaivgi / kling-v2.1

Use Kling v2.1 to generate 5s and 10s videos in 720p and 1080p resolution from a starting image (image-to-video)

google / veo-3

Sound on: Google’s flagship Veo 3 text-to-video model, with audio

google / imagen-4-ultra

Use this ultra version of Imagen 4 when quality matters more than speed and cost

replicate / fast-flux-trainer

Train subjects or styles faster than ever

black-forest-labs / flux-kontext-pro

A state-of-the-art text-based image editing model that delivers high-quality outputs with excellent prompt following and consistent results for transforming images through natural language

black-forest-labs / flux-kontext-max

A premium text-based image editing model that delivers maximum performance and improved typography generation for transforming images through natural language prompts

Official models

Official models are always on, maintained, and have predictable pricing.
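As a rough illustration of what calling one of these models might look like, the sketch below builds (but does not send) an HTTP request for Replicate's model predictions endpoint using only the Python standard library. The helper name `build_prediction_request`, the choice of `google/imagen-4-ultra`, and the `prompt` input field are assumptions made for the example; check each model's page for its actual inputs.

```python
import json
import urllib.request

API_BASE = "https://api.replicate.com/v1"


def build_prediction_request(owner, name, model_input, token):
    """Build a POST request that asks a model to create a prediction.

    Hypothetical helper for illustration: it only constructs the request,
    so nothing is sent until you pass it to urllib.request.urlopen.
    """
    url = f"{API_BASE}/models/{owner}/{name}/predictions"
    # The model's inputs are wrapped in an "input" object in the JSON body.
    body = json.dumps({"input": model_input}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # "prompt" is an assumed input name; the token is a placeholder.
    req = build_prediction_request(
        "google", "imagen-4-ultra", {"prompt": "a lighthouse at dusk"}, "YOUR_API_TOKEN"
    )
    print(req.get_method(), req.full_url)
```

To actually run the prediction, you would pass the request to `urllib.request.urlopen` with a real API token and read the JSON response.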

I want to…

Generate images

Models that generate images from text prompts

Generate videos

Models that create and edit videos

Edit images

Tools for editing images.

Upscale images

Upscaling models that create high-quality images from low-quality images

Generate speech

Convert text to speech

Transcribe speech

Models that convert speech to text

Use LLMs

Models that can understand and generate text

Caption videos

Models that generate text from videos

Make 3D stuff

Models that generate 3D objects, scenes, radiance fields, textures and multi-views.

Restore images

Models that improve or restore images by deblurring, colorization, and removing noise

Generate music

Models to generate and modify music

Caption images

Models that generate text from images

Make videos with Wan2.1

Generate videos with Wan2.1, the fastest and highest-quality open-source video generation model.

Use handy tools

Toolbelt-type models for videos and images.

Control image generation

Guide image generation with more than just text. Use edge detection, depth maps, and sketches to get the results you want.

Extract text from images

Optical character recognition (OCR) and text extraction

Chat with images

Ask language models about images

Sing with voices

Voice-to-voice cloning and musical prosody

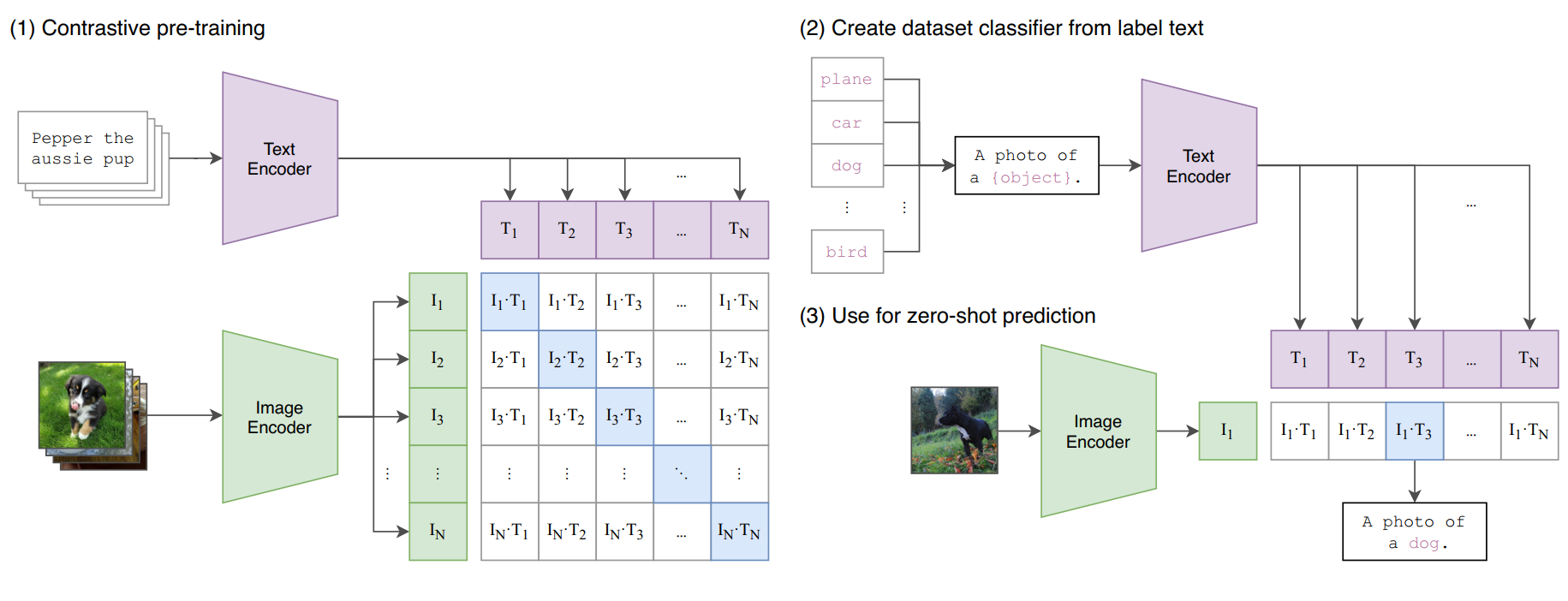

Get embeddings

Models that generate embeddings from inputs

Use a face to make images

Make realistic images of people instantly

Remove backgrounds

Models that remove backgrounds from images and videos

Try for free

Get started with these models without adding a credit card. Whether you're making videos, generating images, or upscaling photos, these are great starting points.

Use the FLUX family of models

The FLUX family of text-to-image models from Black Forest Labs

Use official models

Official models are always on, maintained, and have predictable pricing.

Enhance videos

Models that enhance videos with super-resolution, sound effects, motion capture and other useful production effects.

Detect objects

Models that detect or segment objects in images and videos.

Use FLUX fine-tunes

Browse the diverse range of fine-tunes the community has custom-trained on Replicate

Popular models

SDXL-Lightning by ByteDance: a fast text-to-image model that makes high-quality images in 4 steps

This is the fastest Flux Dev endpoint in the world. Contact us for more at pruna.ai

Return CLIP features for the clip-vit-large-patch14 model

Practical face restoration algorithm for *old photos* or *AI-generated faces*

High-resolution image upscaler and enhancer. Use at ClarityAI.co. A free Magnific alternative. Twitter/X: @philz1337x

Generate CLIP (clip-vit-large-patch14) text & image embeddings

Latest models

Transforms real-life images into Ghibli-style art.

DeepCoder-14B-Preview is a code reasoning LLM fine-tuned from DeepSeek-R1-Distilled-Qwen-14B using distributed reinforcement learning (RL) to scale up to long context lengths.

Spanish and English Text to Speech model from Canopy Labs (3b-es_it-ft-research_release)

This model is an optimised version of stable-diffusion by Stability AI that is 3x faster and 3x cheaper.

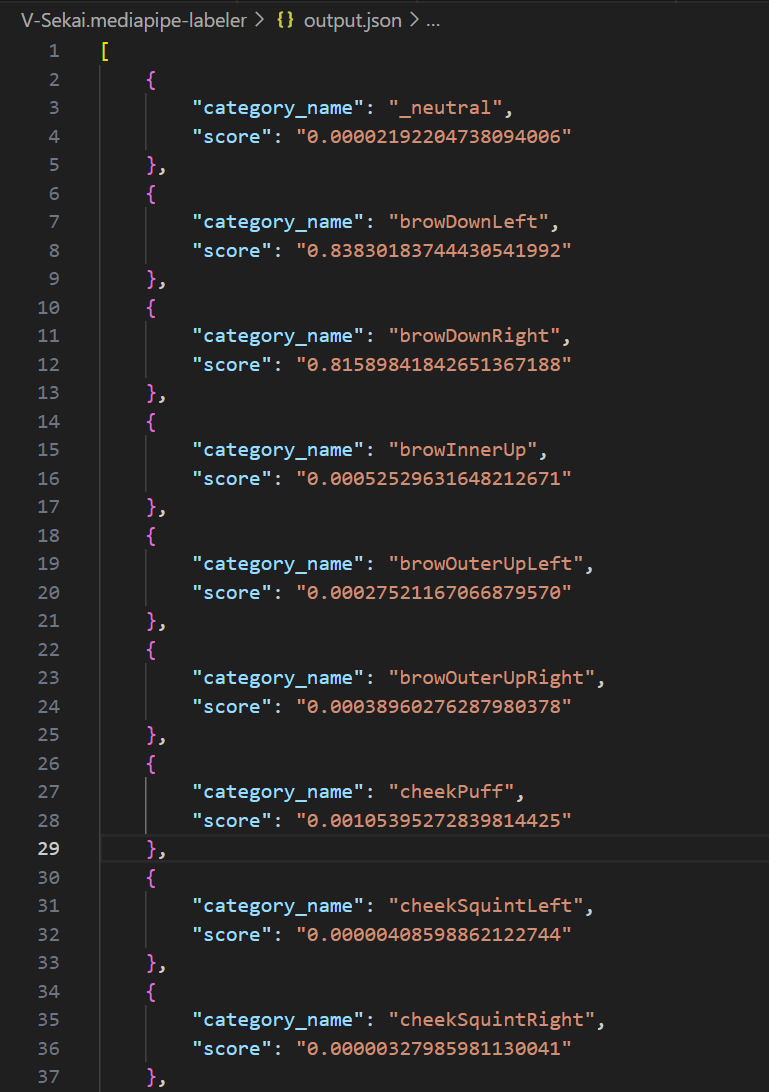

Mediapipe Blendshape Labeler - Predicts the blend shapes of an image.

Fast FLUX DEV with Flux ControlNet Canny, ControlNet Depth, ControlNet Line Art, and ControlNet Upscaler. Use a single ControlNet or all of them. LoRAs: HyperFlex LoRA, Add Details LoRA, Realism LoRA

Qwen2.5-Omni is an end-to-end multimodal model designed to perceive diverse modalities, including text, images, audio, and video, while simultaneously generating text and natural speech responses in a streaming manner.

Generates realistic talking face animations from a portrait image and audio using the CVPR 2025 Sonic model

Transform your portrait photos into any style or setting while preserving your facial identity

Wan 2.1 1.3b Video to Video. Wan is a powerful visual generation model developed by Tongyi Lab of Alibaba Group

Easily create video datasets with auto-captioning for Hunyuan-Video LoRA finetuning

Cost-optimized MMAudio V2 (T4 GPU): Add sound to video using this version running on T4 hardware for lower cost. Synthesizes high-quality audio from video content.

Add sound to video using the MMAudio V2 model. An advanced AI model that synthesizes high-quality audio from video content, enabling seamless video-to-audio transformation.

A redux adapter trained from scratch on Flex.1-alpha, that also works with FLUX.1-dev

Indic Parler-TTS Pretrained is a multilingual Indic extension of Parler-TTS Mini.

SANA-Sprint: One-Step Diffusion with Continuous-Time Consistency Distillation

Open-weight inpainting model for editing and extending images. Guidance-distilled from FLUX.1 Fill [pro].

FLUX1.1 [pro] in ultra and raw modes. Images are up to 4 megapixels. Use raw mode for realism.

Faster, better FLUX Pro. Text-to-image model with excellent image quality, prompt adherence, and output diversity.

State-of-the-art image generation with top of the line prompt following, visual quality, image detail and output diversity.

Professional inpainting and outpainting model with state-of-the-art performance. Edit or extend images with natural, seamless results.

Professional edge-guided image generation. Control structure and composition using Canny edge detection

For the paper "Structured 3D Latents for Scalable and Versatile 3D Generation".

The Flux.1-dev-Controlnet-Upscaler model by www.androcoders.in

Accelerated inference for Wan 2.1 14B text to video, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.

Accelerated inference for Wan 2.1 14B text to video with high resolution, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.

Accelerated inference for Wan 2.1 14B image to video, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.

Accelerated inference for Wan 2.1 14B image to video with high resolution, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation.