Readme

Input Parameter Descriptions

Basic

text: For long prompts, only the first 64 tokens will be used to generate the image.save_as_png: If selected, the image is saved in lossless png format, otherwise jpg.progressive_outputs: Show intermediate outputs while running. This adds less than a second to the run time.seamless: Tile images in token space instead of pixel space. This has the effect of blending the images at the borders.grid_size: Size of the image grid. 5x5 takes about 15 seconds, 9x9 takes about 40 seconds.

Advanced

temperature: High temperature increases the probability of sampling low scoring image tokens.top_k: Each image token is sampled from the top-k scoring tokens.

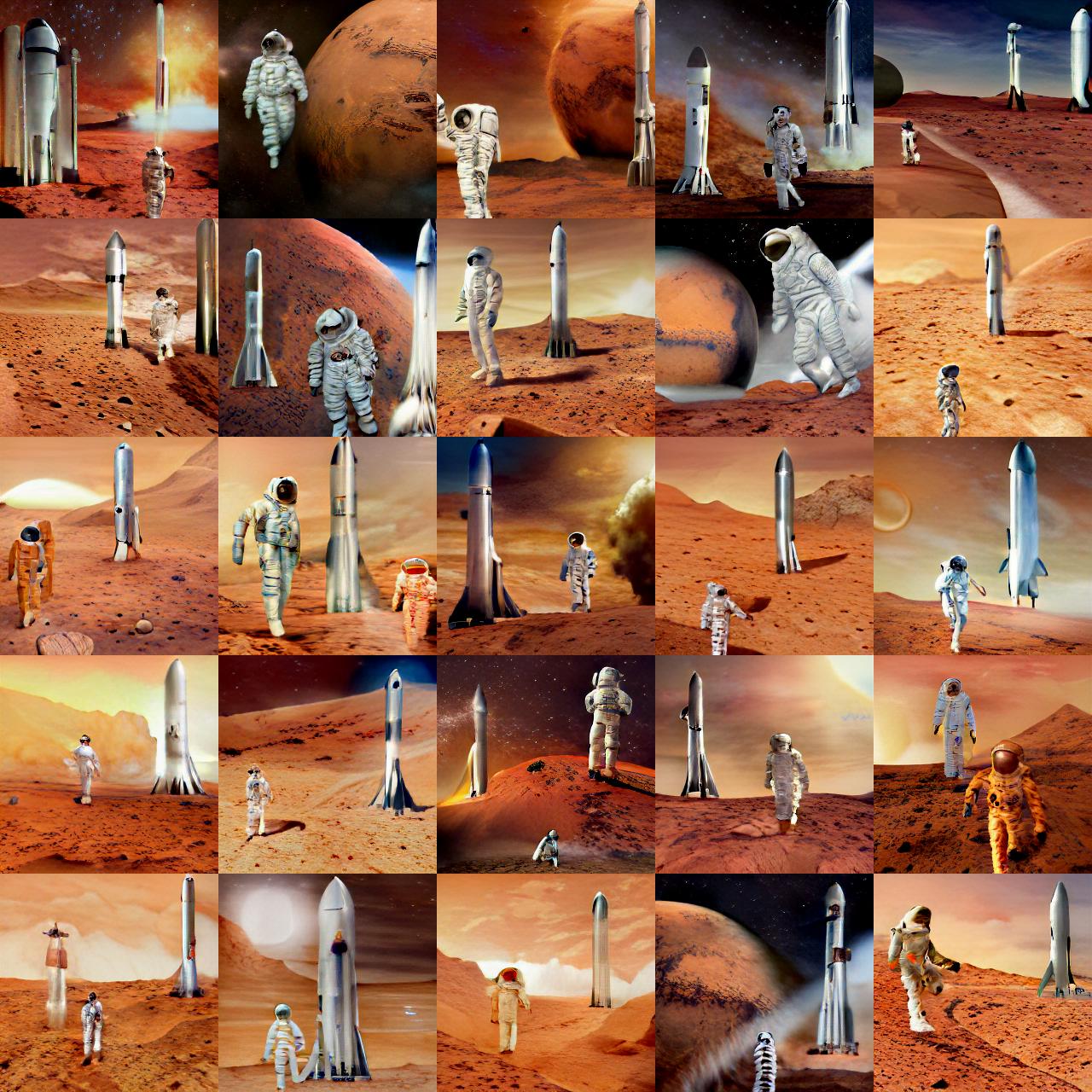

Increasing temperature and/or top_k will increase variety in the generated images at the expense of the images being less coherent. Setting temperature high and top_k low can result in more variety without sacrificing coherence.

Expert

supercondition_factor: Higher values can result in better agreement with the text. Letlogits_condbe the logits computed from the text prompt andlogits_uncondbe the logits computed from an empty text prompt, and letabe the super-condition factor, thenlogits = logits_cond * a + logits_uncond * (1 - a)

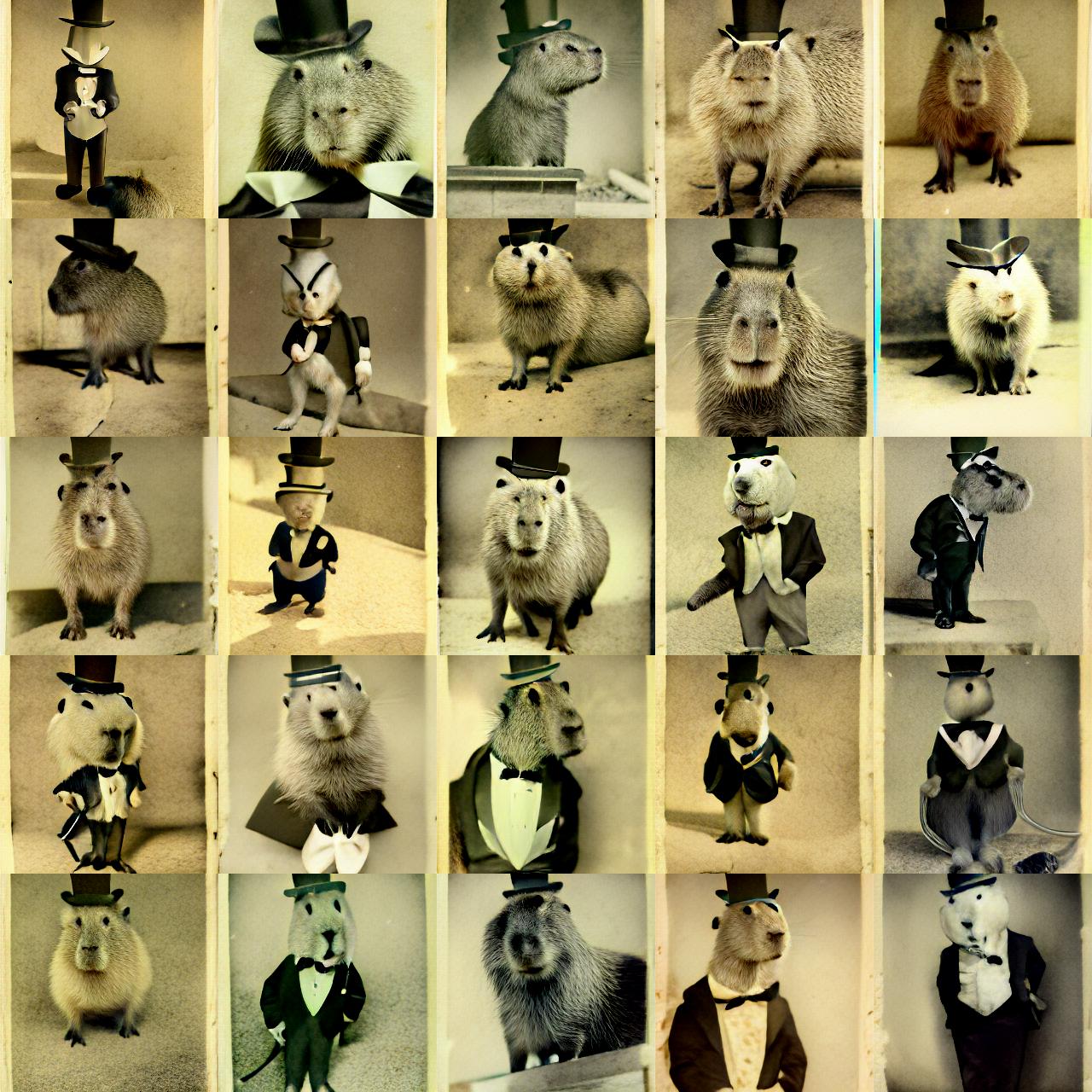

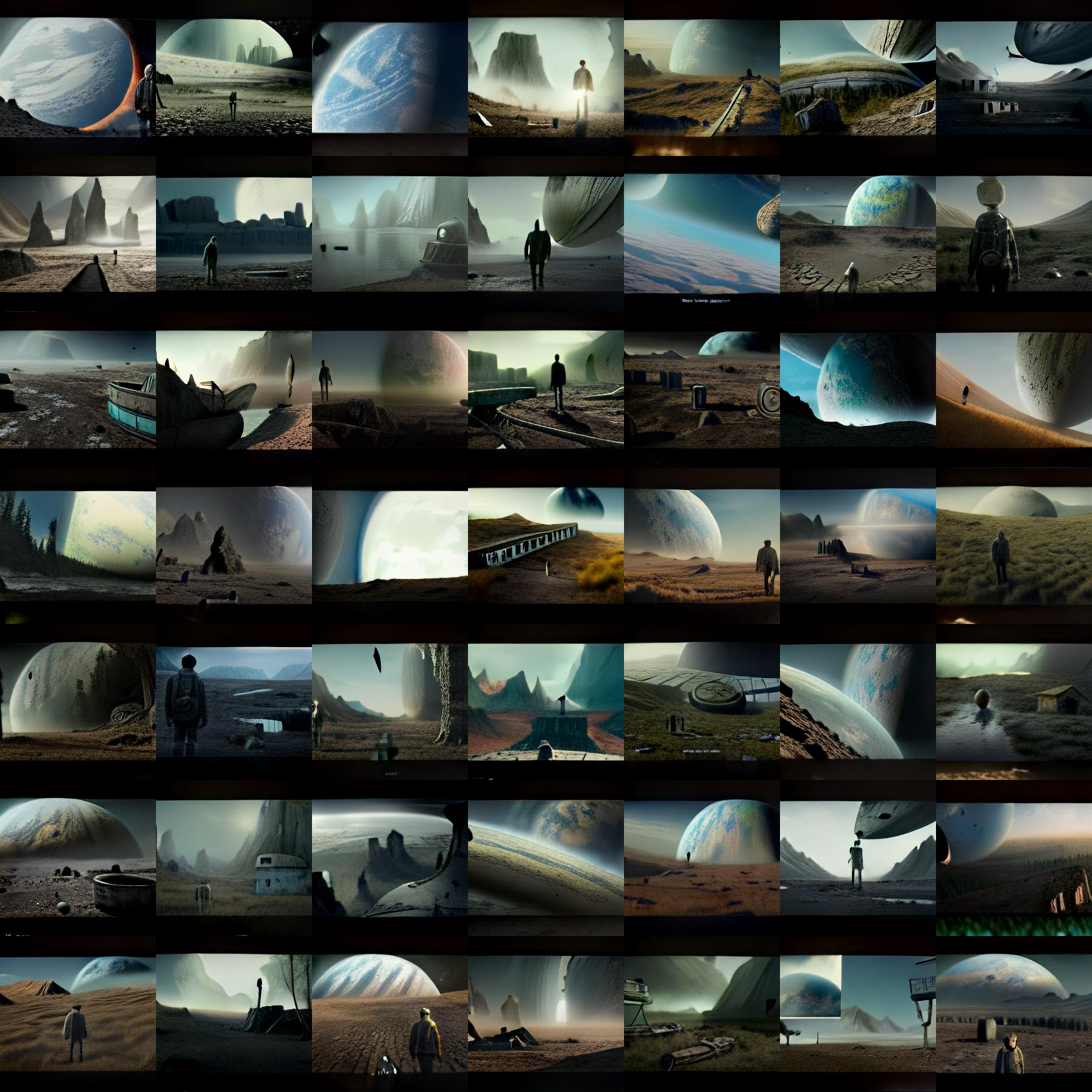

Example

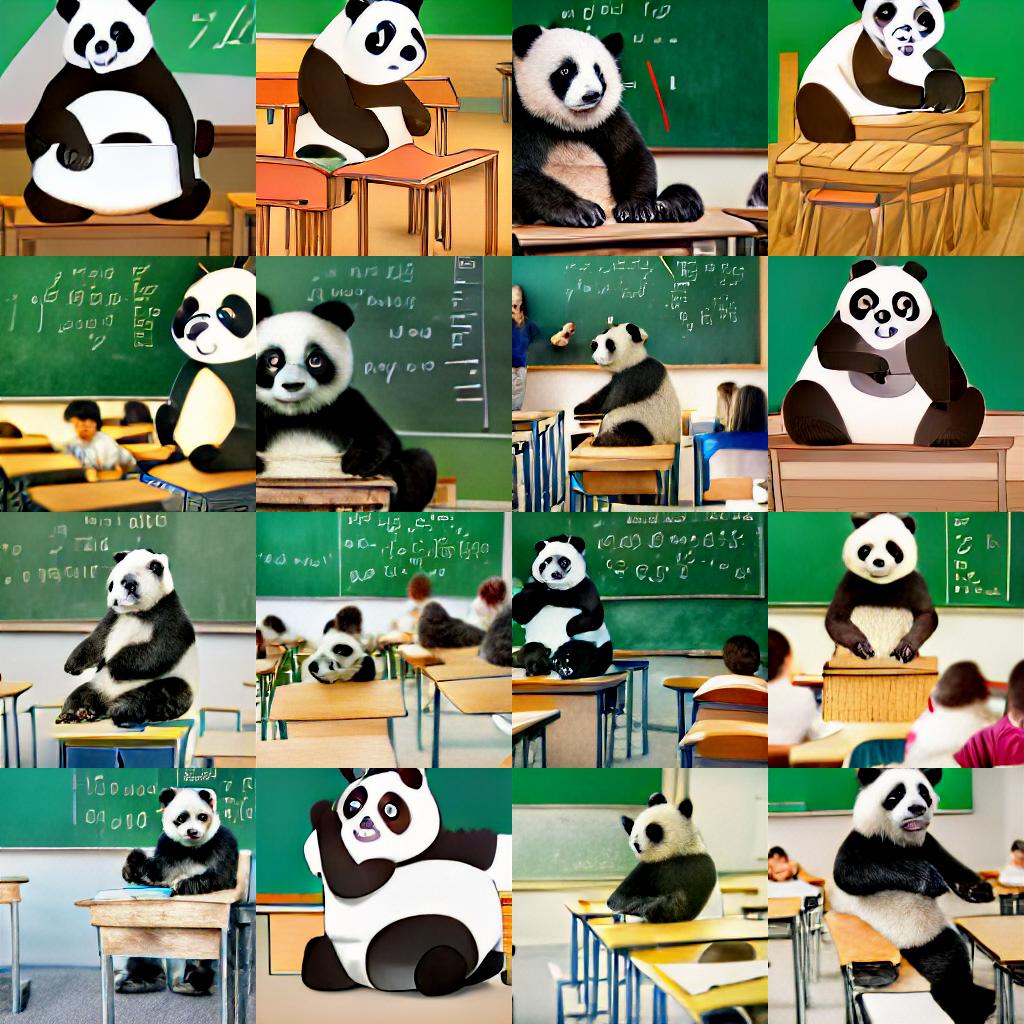

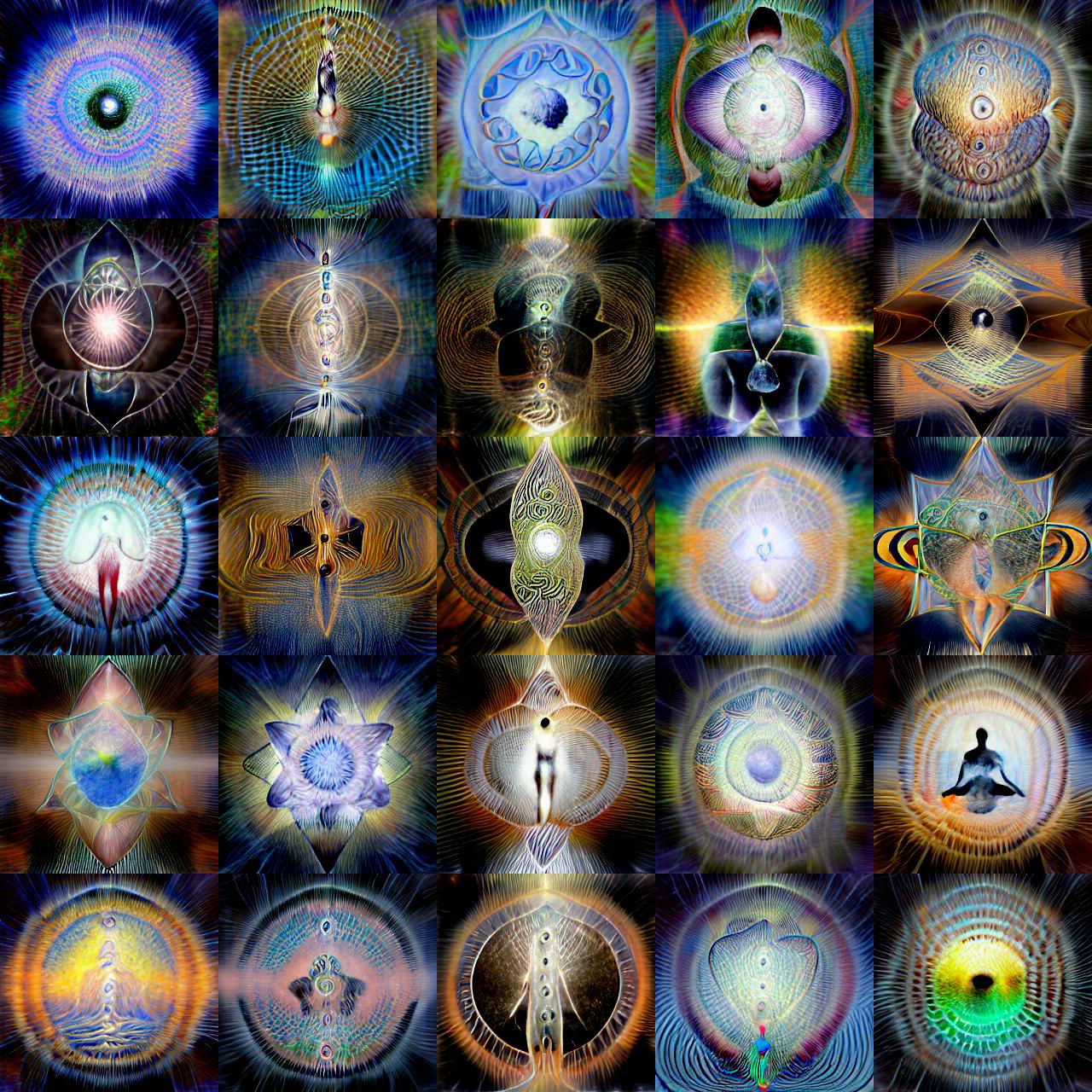

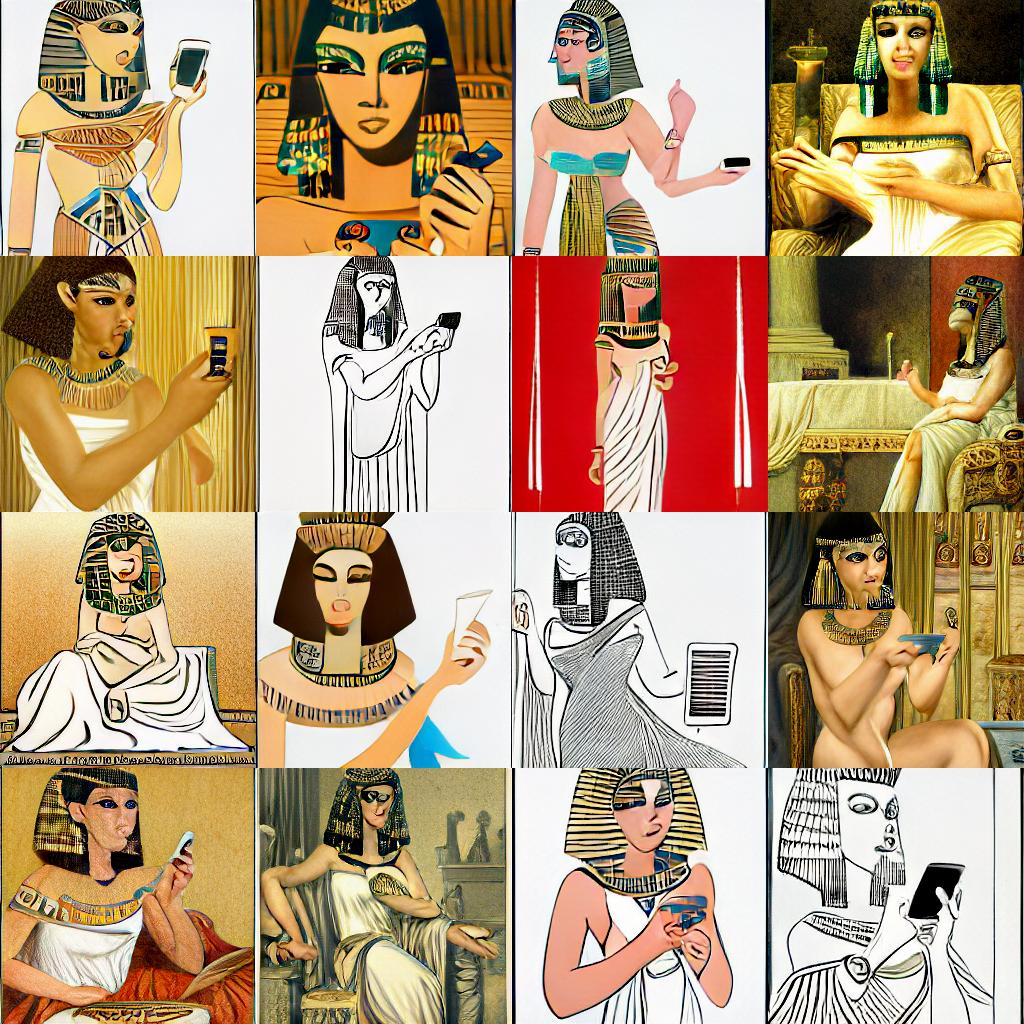

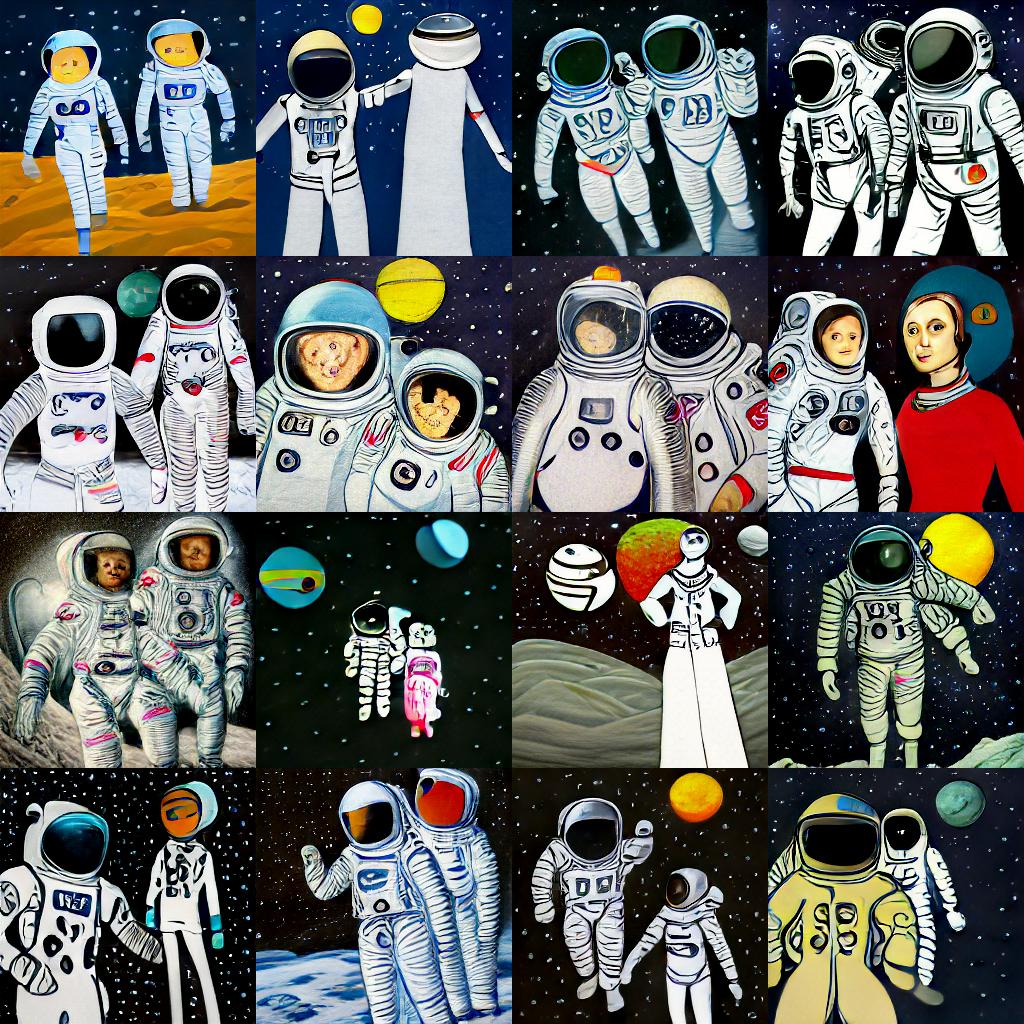

Consider the images generated for “panda with top hat reading a book” with different settings.

text = "panda with top hat reading a book"

temperature = 0.5

top_k = 128

supercondition_factor = 4

text = "panda with top hat reading a book"

temperature = 4

top_k = 64

supercondition_factor = 16